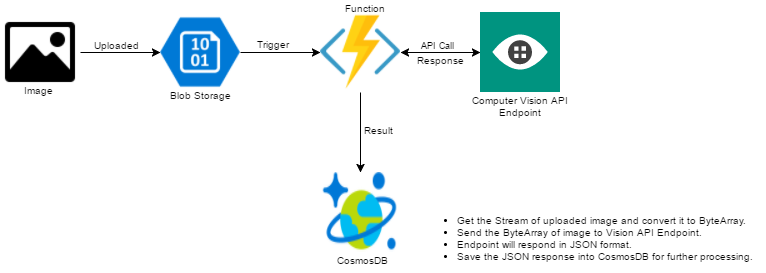

I started working on a project that combines a lot of intelligent APIs and Machine Learning stuff. One of the things I have to accomplish is extracting the text from images as they are uploaded to storage. For this part of the project I planned to use the Microsoft Cognitive Services Computer Vision API. Here is the relevant extract from my architecture diagram.

Let’s get started by provisioning a new Azure Function.

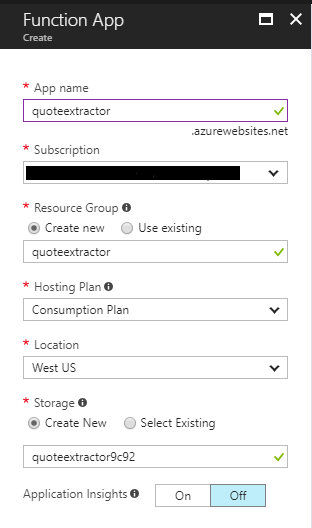

I named my Function App quoteextractor and selected Consumption Plan as the Hosting Plan instead of App Service Plan. With the Consumption Plan you are billed only for what you use, that is, only when your function executes. With an App Service Plan, on the other hand, you are billed monthly for the plan you choose, even if your function executes only a few times a month or not at all. I selected West US as the Location because the endpoint of my Cognitive Services subscription is in West US. I also created a new Storage Account. Click the Create button to create the Function App.

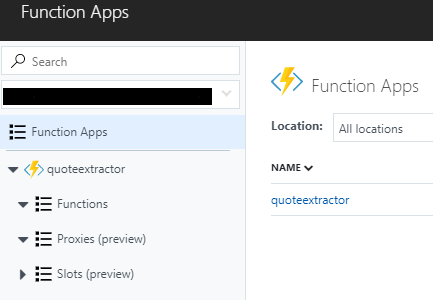

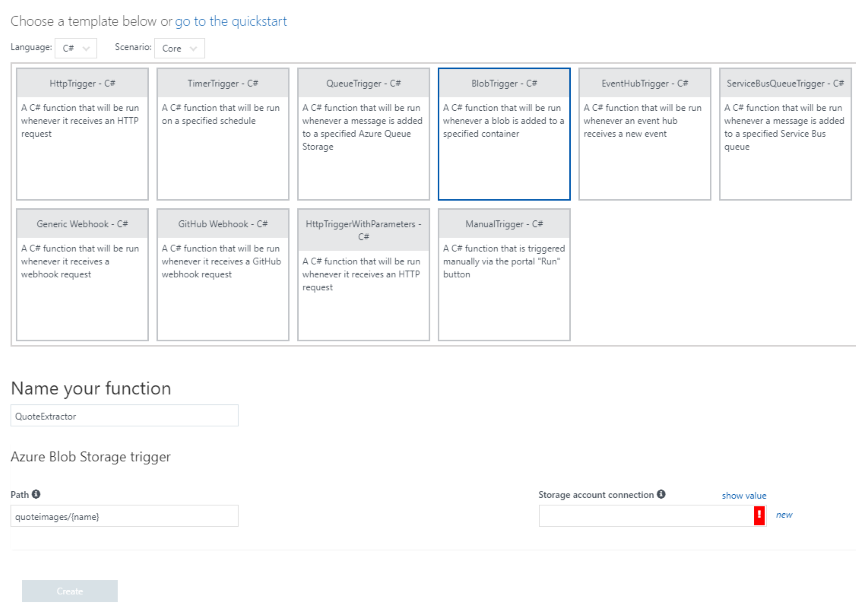

After the Function App is created successfully, create a new function in the quoteextractor Function App. Click Functions on the left-hand side and then click New Function in the window on the right, as shown in the screenshot below.

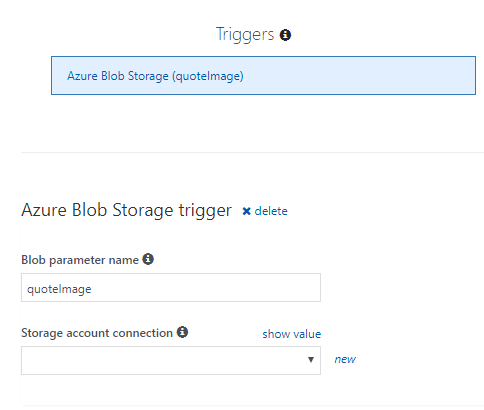

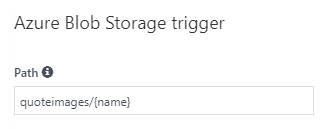

The idea is to trigger the function whenever a new image is uploaded to blob storage. To filter the list of templates, select C# as the Language and then select BlobTrigger - C# from the template list. I also changed the name of the function to QuoteExtractor and changed the Path parameter of the Azure Blob Storage trigger to use quoteimages. quoteimages is the name of the container the function binds to: whenever a new image (or any other item) is added to that container, the function is triggered.

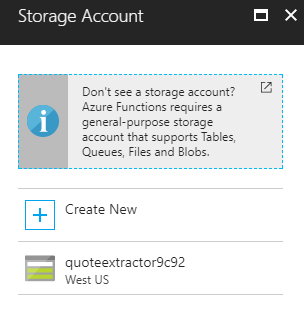

Now change the Storage account connection, which is basically the connection string for your storage account. To create a new connection, click the new link and select the storage account you want your function to be associated with. I am selecting the same storage account that was created along with the Function App.

Once you select the storage account, you will be able to see the connection key in the Storage account connection dropdown. If you want to view the full connection string then click the show value link.

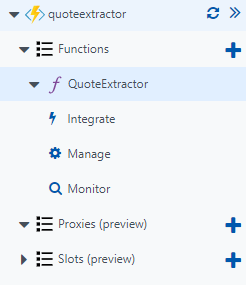

Click the Create button to create the function. You will now be able to see your function name under the Functions section. Expand your function and you will see three other segments named Integrate, Manage and Monitor.

Click Integrate and, under Triggers, change the Blob parameter name from myBlob to quoteImage or whatever name you prefer. This name is important because the function code uses a parameter with exactly the same name (quoteImage in the Run method). Click Save to save the settings.

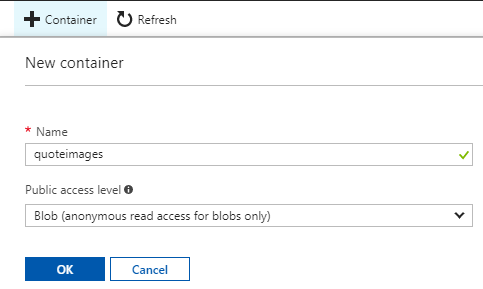

The storage account created along with the Function App does not have a container yet. Add a container with the name used in the path of the Azure Blob Storage trigger, which is quoteimages.

Make sure you set the Public access level to Blob. Click OK to create the container in your storage account.

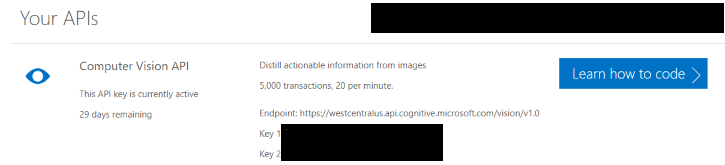

With this step, you should be done with all the configuration required for the Azure Function to work properly. Now let’s get the Computer Vision API subscription. Go to https://azure.microsoft.com/en-in/try/cognitive-services/ and, under the Vision section, click Get API Key next to Computer Vision API. Agree to the terms and conditions and continue. Log in with your preferred account to get the subscription ready. Once everything goes well, you will see your subscription key and endpoint details.
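Rather than pasting the subscription key into the function code, you could keep it in the Function App's Application settings, which Azure Functions exposes to your code as environment variables. Below is a minimal sketch of reading such a setting; the setting name `VisionApiKey` is a hypothetical example, not something the Function App creates for you, and the demo sets the variable locally so the snippet runs on its own.

```csharp
using System;

// Reads a required app setting. In Azure Functions, Application settings
// surface as environment variables. "VisionApiKey" below is a hypothetical name.
static string GetRequiredSetting(string name)
{
    string value = Environment.GetEnvironmentVariable(name);
    if (string.IsNullOrWhiteSpace(value))
    {
        throw new InvalidOperationException($"App setting '{name}' is missing or empty.");
    }
    return value;
}

// Local demo: simulate the setting, then read it back.
Environment.SetEnvironmentVariable("VisionApiKey", "demo-key");
Console.WriteLine(GetRequiredSetting("VisionApiKey")); // prints demo-key
```

Failing loudly on a missing setting makes a misconfigured Function App show up in the logs immediately instead of as a failed API call later.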

The response I get from the API is in JSON format. I can either parse the JSON response in the function itself or save it directly in CosmosDB or any other persistent storage. I am going to store the entire response in CosmosDB in raw format. This step is optional for you, as the idea is to see how easy it is to use the Cognitive Services Computer Vision API with Azure Functions. If you skip this step, then you have to tweak the code a bit so that you can see the response in the log window of your function.
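If you would rather parse the response in the function than store it raw, a sketch like the one below pulls the recognized words out of the OCR JSON and joins them line by line. It assumes the v1.0 OCR response shape (a `regions` array whose `lines` contain `words`, each word carrying a `text` property) and uses System.Text.Json, which is built into .NET Core 3.0 and later; the sample JSON is made up for illustration and omits the bounding boxes.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Extracts recognized text from an OCR response, one string per detected line.
// Assumes the regions -> lines -> words -> text structure of the v1.0 OCR API.
static List<string> ExtractLines(string ocrJson)
{
    var lines = new List<string>();
    using JsonDocument doc = JsonDocument.Parse(ocrJson);

    if (!doc.RootElement.TryGetProperty("regions", out JsonElement regions))
    {
        return lines; // e.g. an error payload without "regions"
    }

    foreach (JsonElement region in regions.EnumerateArray())
    {
        foreach (JsonElement line in region.GetProperty("lines").EnumerateArray())
        {
            // Join the words of one line with single spaces.
            IEnumerable<string> words = line.GetProperty("words")
                .EnumerateArray()
                .Select(w => w.GetProperty("text").GetString());
            lines.Add(string.Join(" ", words));
        }
    }
    return lines;
}

// Made-up sample in the OCR response shape (bounding boxes omitted).
string sample = @"{
  ""language"": ""en"",
  ""orientation"": ""Up"",
  ""regions"": [
    { ""lines"": [
        { ""words"": [ { ""text"": ""Stay"" }, { ""text"": ""hungry,"" } ] },
        { ""words"": [ { ""text"": ""stay"" }, { ""text"": ""foolish."" } ] }
    ] }
  ]
}";

foreach (string text in ExtractLines(sample))
{
    Console.WriteLine(text);
}
// prints:
// Stay hungry,
// stay foolish.
```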

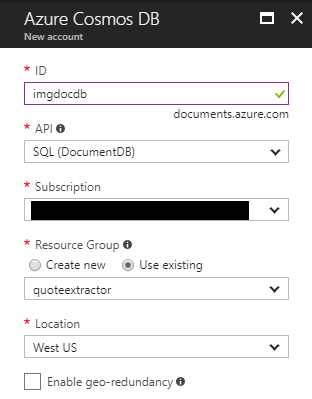

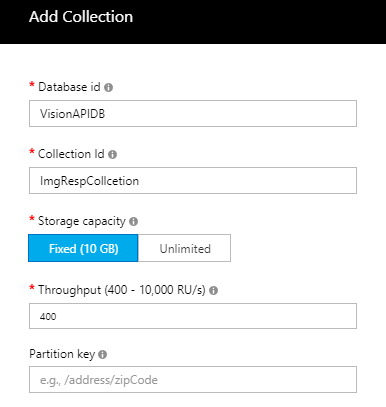

Provision a new CosmosDB account and add a collection to it. Right after provisioning, the getting started page lets you create a default database named ToDoList with a collection called Items. If you prefer, you can instead create a new database and add a new collection with names that make more sense for your data.

To create a new database and collection go to Data Explorer and click on New Collection. Enter the details and click OK to create a database and a collection.

Now we have everything ready. Let’s get started with the CODE!!

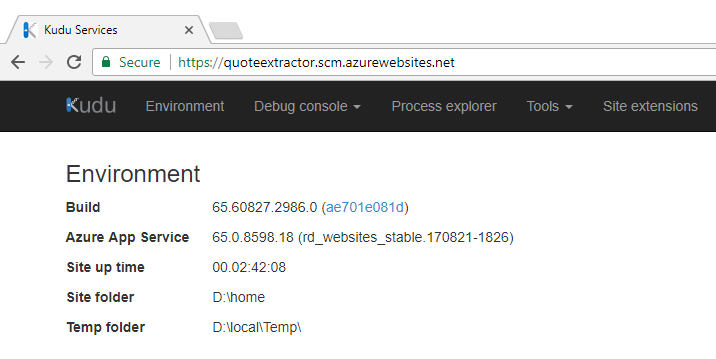

Let’s start by creating a new function called SaveResponse, which takes the API response as a string parameter. In this method I am going to use the Azure DocumentDB library to communicate with CosmosDB, which means I have to add this library as a reference to my function. To do that, navigate to the KUDU portal by adding scm to the URL of your function app, like so: https://quoteextractor.scm.azurewebsites.net/. This opens the KUDU portal.

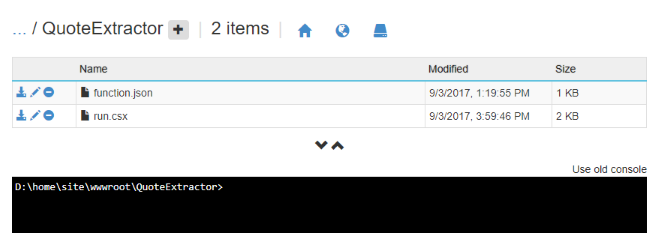

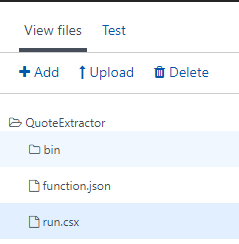

Go to Debug console and click CMD. Navigate to site/wwwroot/<Function Name>. In the command window, use the mkdir command to create a folder named bin.

```
mkdir bin
```

You can now see the bin folder in the function app directory. Click the bin folder and upload the library DLL(s), here Microsoft.Azure.Documents.Client.dll.

At the very top of the function, use the #r directive to reference external libraries or assemblies. You can then use the library in the function with the using directive.

```csharp
#r "D:\home\site\wwwroot\QuoteExtractor\bin\Microsoft.Azure.Documents.Client.dll"
```

Right after the reference to the DocumentDB assembly, add the namespaces with using directives. Later in the function you will also make HTTP POST calls to the API endpoint, so you need the System.Net.Http and System.Net.Http.Headers namespaces as well.

```csharp
using Microsoft.Azure.Documents;
using Microsoft.Azure.Documents.Client;
using System.Net.Http;
using System.Net.Http.Headers;
```

The code for the SaveResponse function is very simple: it uses the DocumentClient class to create a new document for the response we receive from the Vision API. Here is the complete code.

private static async Task<bool> SaveResponse(string APIResponse)

{

bool isSaved = false;

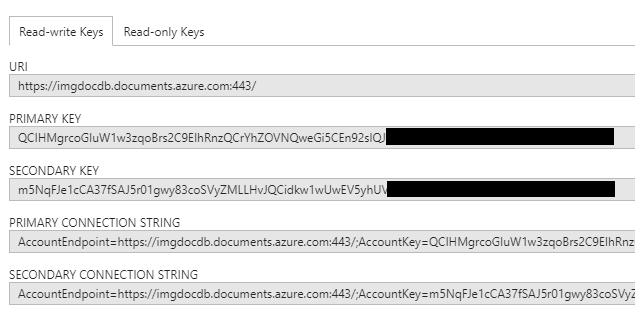

const string EndpointUrl = "https://imgdocdb.documents.azure.com:443/";

const string PrimaryKey = "QCIHMgrcoGIuW1w3zqoBrs2C9EIhRnxQCrYhZOVNQweGi5CEn94sIQJOHK3NleFYDoFclB7DwhYATRJwEiUPag==";

try

{

DocumentClient client = new DocumentClient(new Uri(EndpointUrl), PrimaryKey);

await client.CreateDocumentAsync(UriFactory.CreateDocumentCollectionUri("VisionAPIDB", "ImgRespCollcetion"), new {APIResponse});

isSaved = true;

}

catch(Exception)

{

//Do something useful here.

}

return isSaved;

}

Notice the database and collection names passed to CreateDocumentAsync: CreateDocumentCollectionUri returns the link to the collection, built from the database name (VisionAPIDB) and the collection name (ImgRespCollcetion). The last parameter, new {APIResponse}, is an anonymous object wrapping the response received from the Vision API.
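To make those two arguments concrete, the sketch below prints what they boil down to. It is an illustration, not the DocumentDB SDK itself: `CollectionLink` is a hypothetical helper that mimics the `dbs/{database}/colls/{collection}` link format produced by `UriFactory.CreateDocumentCollectionUri`, and System.Text.Json stands in for the SDK's own serializer to show the shape of the stored document.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper mirroring the collection link format
// produced by UriFactory.CreateDocumentCollectionUri.
static string CollectionLink(string database, string collection) =>
    $"dbs/{database}/colls/{collection}";

// new { APIResponse } is an anonymous object with one property named after the variable.
string APIResponse = "raw OCR json here";
string document = JsonSerializer.Serialize(new { APIResponse });

Console.WriteLine(CollectionLink("VisionAPIDB", "ImgRespCollcetion")); // dbs/VisionAPIDB/colls/ImgRespCollcetion
Console.WriteLine(document); // {"APIResponse":"raw OCR json here"}
```

One consequence of this design: the OCR JSON ends up as a string inside the `APIResponse` property rather than as nested JSON, so querying individual words in CosmosDB would require parsing it first.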

Create a new function and call it ExtractText. This function takes the Stream of the image, which we get from the Run function. It converts the stream into a byte array with the help of another method, ConvertStreamToByteArray, and then sends the byte array to the Vision API endpoint to get the response. ExtractText returns that response, and Run then saves it in CosmosDB. Here is the complete code for the ExtractText function.

```csharp
// One HttpClient shared by all invocations; creating a new one per call can exhaust sockets.
private static readonly HttpClient httpClient = new HttpClient();

private static async Task<string> ExtractText(Stream quoteImage, TraceWriter log)
{
    string APIResponse = string.Empty;

    // Subscription key and endpoint you get when registering for the Computer Vision API.
    string APIKEY = "<your-computer-vision-subscription-key>";
    string Endpoint = "https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/ocr";

    // language=unk: auto-detect the language; detectOrientation=true: detect text orientation.
    Endpoint = Endpoint + "?language=unk&detectOrientation=true";

    byte[] imgArray = ConvertStreamToByteArray(quoteImage);

    try
    {
        using (var request = new HttpRequestMessage(HttpMethod.Post, Endpoint))
        {
            // The key goes on the request, so the shared client's default headers stay untouched.
            request.Headers.Add("Ocp-Apim-Subscription-Key", APIKEY);
            request.Content = new ByteArrayContent(imgArray);
            request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

            using (HttpResponseMessage response = await httpClient.SendAsync(request))
            {
                APIResponse = await response.Content.ReadAsStringAsync();
                log.Info(APIResponse);

                //TODO: Perform check on the response and act accordingly.
            }
        }
    }
    catch (Exception ex)
    {
        log.Error("Error occurred", ex);
    }

    return APIResponse;
}
```
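ExtractText builds the request URL by plain string concatenation, which is fine for fixed values. If the language or orientation flag ever came from configuration, a small builder would keep the value escaped and the boolean lowercase, matching the `detectOrientation=true` form used in the function. A sketch; `BuildOcrUri` is a hypothetical helper, not part of any SDK.

```csharp
using System;

// Hypothetical helper: appends the OCR query parameters to the base endpoint.
// bool.ToString() yields "True"/"False", so it is lowercased to match the
// "detectOrientation=true" form used in ExtractText.
static string BuildOcrUri(string endpoint, string language, bool detectOrientation)
{
    string orientation = detectOrientation.ToString().ToLowerInvariant();
    return $"{endpoint}?language={Uri.EscapeDataString(language)}&detectOrientation={orientation}";
}

string uri = BuildOcrUri(
    "https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/ocr", "unk", true);
Console.WriteLine(uri);
// https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/ocr?language=unk&detectOrientation=true
```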

There are a few things in ExtractText that need our attention. You will get the subscription key and the endpoint when you register for the Cognitive Services Computer Vision API. Notice that the endpoint I am using ends with ocr, which is important because I want to read the text from the images I upload to the storage. The other parameters I pass are language and detectOrientation. language has the value unk, which stands for unknown and tells the API to auto-detect the language, and detectOrientation tells the API to detect the text orientation in the image. The API responds in JSON format; this method returns the JSON to the Run function, where it becomes the input for the SaveResponse method. Here is the code for the ConvertStreamToByteArray method.

```csharp
private static byte[] ConvertStreamToByteArray(Stream input)
{
    // Copy the stream into memory in 16 KB chunks.
    byte[] buffer = new byte[16 * 1024];

    using (MemoryStream ms = new MemoryStream())
    {
        int read;
        while ((read = input.Read(buffer, 0, buffer.Length)) > 0)
        {
            ms.Write(buffer, 0, read);
        }

        return ms.ToArray();
    }
}
```
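ConvertStreamToByteArray copies the stream in 16 KB chunks by hand. `Stream.CopyTo` (available since .NET Framework 4.0) runs the same read/write loop for you, so an equivalent, shorter version could look like the sketch below. Like the original, it reads from the stream's current position to the end.

```csharp
using System;
using System.IO;
using System.Linq;

// Equivalent of ConvertStreamToByteArray using Stream.CopyTo,
// which reads from the current position to the end of the stream.
static byte[] ConvertStreamToByteArray(Stream input)
{
    using (MemoryStream ms = new MemoryStream())
    {
        input.CopyTo(ms);
        return ms.ToArray();
    }
}

// Quick check with an in-memory stream larger than the 16 KB buffer of the original.
byte[] original = Enumerable.Range(0, 40_000).Select(i => (byte)(i % 256)).ToArray();
byte[] copy = ConvertStreamToByteArray(new MemoryStream(original));
Console.WriteLine(copy.SequenceEqual(original)); // True
```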

Now comes the method that ties everything together, the Run method. Here is what the `Run` method looks like.

```csharp
public static async Task<bool> Run(Stream quoteImage, string name, TraceWriter log)
{
    // Read the text from the uploaded image, then store the raw JSON in CosmosDB.
    string response = await ExtractText(quoteImage, log);
    bool isSaved = await SaveResponse(response);
    return isSaved;
}
```

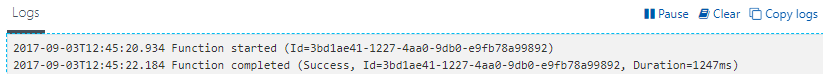

I don’t think this method needs any explanation. Let’s execute the function by uploading a new image to the storage container and check whether the log shows up in the Logs window and a new document appears in CosmosDB. Here is a screenshot of the Logs window after the function was triggered by the image I uploaded.

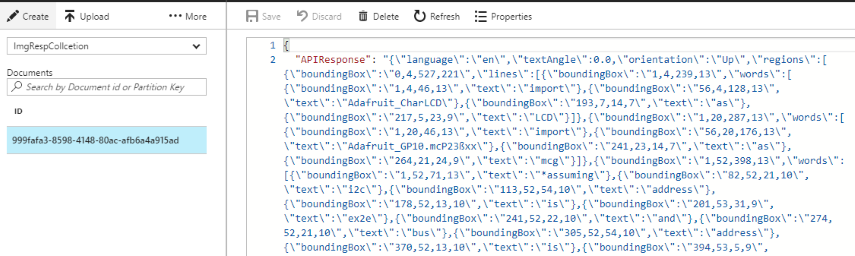

In CosmosDB, I can see a new document added to the collection. To view the complete document, navigate to Document Explorer and check the results. This is how my view looks:

To save you all a little time, here is the complete code.

```csharp
#r "D:\home\site\wwwroot\QuoteExtractor\bin\Microsoft.Azure.Documents.Client.dll"

using System.Net.Http.Headers;
using System.Net.Http;
using Microsoft.Azure.Documents;
using Microsoft.Azure.Documents.Client;

// One HttpClient shared by all invocations of the function.
private static readonly HttpClient httpClient = new HttpClient();

public static async Task<bool> Run(Stream quoteImage, string name, TraceWriter log)
{
    string response = await ExtractText(quoteImage, log);
    bool isSaved = await SaveResponse(response);
    return isSaved;
}

private static async Task<string> ExtractText(Stream quoteImage, TraceWriter log)
{
    string APIResponse = string.Empty;

    // Replace with your own Computer Vision subscription key and endpoint.
    string APIKEY = "<your-computer-vision-subscription-key>";
    string Endpoint = "https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/ocr";
    Endpoint = Endpoint + "?language=unk&detectOrientation=true";

    byte[] imgArray = ConvertStreamToByteArray(quoteImage);

    try
    {
        using (var request = new HttpRequestMessage(HttpMethod.Post, Endpoint))
        {
            request.Headers.Add("Ocp-Apim-Subscription-Key", APIKEY);
            request.Content = new ByteArrayContent(imgArray);
            request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

            using (HttpResponseMessage response = await httpClient.SendAsync(request))
            {
                APIResponse = await response.Content.ReadAsStringAsync();
                log.Info(APIResponse);

                //TODO: Perform check on the response and act accordingly.
            }
        }
    }
    catch (Exception ex)
    {
        log.Error("Error occurred", ex);
    }

    return APIResponse;
}

private static async Task<bool> SaveResponse(string APIResponse)
{
    bool isSaved = false;

    // Replace with your own CosmosDB account URI and primary key.
    const string EndpointUrl = "https://imgdocdb.documents.azure.com:443/";
    const string PrimaryKey = "<your-cosmosdb-primary-key>";

    try
    {
        using (DocumentClient client = new DocumentClient(new Uri(EndpointUrl), PrimaryKey))
        {
            await client.CreateDocumentAsync(
                UriFactory.CreateDocumentCollectionUri("VisionAPIDB", "ImgRespCollcetion"),
                new { APIResponse });
        }

        isSaved = true;
    }
    catch (Exception)
    {
        //Do something useful here.
    }

    return isSaved;
}

private static byte[] ConvertStreamToByteArray(Stream input)
{
    byte[] buffer = new byte[16 * 1024];

    using (MemoryStream ms = new MemoryStream())
    {
        int read;
        while ((read = input.Read(buffer, 0, buffer.Length)) > 0)
        {
            ms.Write(buffer, 0, read);
        }

        return ms.ToArray();
    }
}
```
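ExtractText leaves a TODO for checking the response, and Run passes whatever comes back to SaveResponse, including error payloads. One way to close that gap is to return the body only for a success status code. The sketch below uses a hypothetical helper, `ReadOcrResultAsync`, and exercises it with locally built `HttpResponseMessage` objects so it runs without calling the API. In the function you might call it with the response from the OCR request, pass `msg => log.Error(msg)` as the logger, and skip SaveResponse when it returns null.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical helper: returns the body of a successful OCR call, or null
// (after reporting the status) so the caller can skip saving it.
static async Task<string> ReadOcrResultAsync(HttpResponseMessage response, Action<string> logError)
{
    string body = await response.Content.ReadAsStringAsync();
    if (!response.IsSuccessStatusCode)
    {
        logError($"OCR call failed with {(int)response.StatusCode} {response.StatusCode}: {body}");
        return null;
    }
    return body;
}

// Local check with hand-built responses instead of a real API call.
var ok = new HttpResponseMessage(HttpStatusCode.OK) { Content = new StringContent("{\"regions\":[]}") };
var bad = new HttpResponseMessage(HttpStatusCode.BadRequest) { Content = new StringContent("bad image") };

Console.WriteLine(await ReadOcrResultAsync(ok, Console.WriteLine));          // {"regions":[]}
Console.WriteLine(await ReadOcrResultAsync(bad, Console.WriteLine) == null); // logs the failure, then True
```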