My team at Microsoft builds tools that improve the customer experience when you contact Microsoft support. Although customers never use our tools directly, the tools play an essential role in improving that experience and in reducing overall cost. This makes our work critical to the overall success of support, and it must perform well under varying load, especially on holidays.

Problem Statement

The team wants to load test its application from different geographies and record the resulting metrics.

I am not the primary team member leading the load testing project, but I am very interested in how the other team is going to achieve this. Although they have a different plan, I came up with my own simple but useful way of running these tests with Azure Container Instances.

To accomplish this, I plan to use K6, a load testing tool from Grafana Labs that is built specifically for engineering teams. I need to set up Azure Container Instances with the K6 image and configure an Azure file share to act as a volume mount.

Setting up resources in Azure

I will start by creating a resource group in Azure named cannon. You can name it anything you want.

I am using the Azure CLI, also known as the AZ CLI. If you have not set it up yet, I strongly suggest you do so. If you prefer not to use it, you can still create these resources with the Azure Portal, Terraform, or similar tools.

Create a new Resource Group

$ az group create --name cannon --location eastus

Create a new Azure Storage Account

I need an Azure Storage account because File Share service is a part of Azure Storage.

The command below creates a new Azure Storage account inside the resource group cannon, with the Standard performance tier and locally redundant storage (LRS). You can change these values to suit your needs.

$ az storage account create --name geoloadteststore \
    --resource-group cannon \
    --location eastus \
    --sku Standard_LRS

Create a new File Share

The command below creates a new file share, which I will use as a volume mount for my Azure Container Instance.

az storage share create \
    --account-name geoloadteststore \
    --name loadtestresults \
    --quota 1

In the above command, account-name and name are mandatory. quota is optional, but I have used it so that the share size is not left at the default, which is 5TB. Setting quota to 1 sets the size of my share to 1GB.

Create and run Azure Container Instance

When I create a new Azure Container Instance, it will use an image to create a container and run it. This means that I don’t have to run the container explicitly unless there is an error.

Here is an AZ command that will create a new Azure Container Instance and execute the load test using K6.

az container create -n k6demo \
    --resource-group cannon \
    --location eastus \
    --image grafana/k6:latest \
    --azure-file-volume-account-name geoloadteststore \
    --azure-file-volume-account-key RhGutivQKlz5llXx9gPxM/CP/dlXWLw5x6/SHyCl+GtLZeRp9cAYEByYTo3vL2EFAy0Nz0H+n1CV+AStTNGEmA== \
    --azure-file-volume-share-name loadtestresults \
    --azure-file-volume-mount-path /results \
    --command-line "k6 run --duration 10s --vus 5 /results/tests/script_eastus.js --out json=/results/logs/test_results_eastus.json" \
    --restart-policy Never

The above command has a lot of detail, and a few parts of it deserve close attention, but most of it is simple to understand. The image I am using is published by Grafana from their verified Docker Hub account. I then use the Azure file share information to set up the volume mount.
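The command above passes the storage account key as a literal value. As a minimal sketch, assuming you have already run `az login` and are allowed to list the account's keys, the key can instead be read at run time and kept in a shell variable. The helper name `get_storage_key` and the variable `STORAGE_KEY` are hypothetical and used only for illustration.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print the first access key of a storage account.
# Assumes `az login` has been run and the caller may list account keys.
get_storage_key() {
  local resource_group="$1" account_name="$2"
  az storage account keys list \
    --resource-group "$resource_group" \
    --account-name "$account_name" \
    --query "[0].value" \
    --output tsv
}

# Usage, with the names from this article:
#   STORAGE_KEY="$(get_storage_key cannon geoloadteststore)"
#   az container create ... --azure-file-volume-account-key "$STORAGE_KEY" ...
```

Keeping the key in a variable means it does not have to be pasted into scripts or shared command snippets.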

The important part here is the way the volume mount is used. The --azure-file-volume-mount-path parameter has the value /results, which is the mount path inside the container. This means that you don’t have to create a folder named results in the file share. If you look at the next parameter, --command-line, you can see that the K6 test script is read from the /results/tests folder and the output of the command is stored in /results/logs. If you wish, you can also use the full path of a blob storage or even an S3 bucket to read your script file.

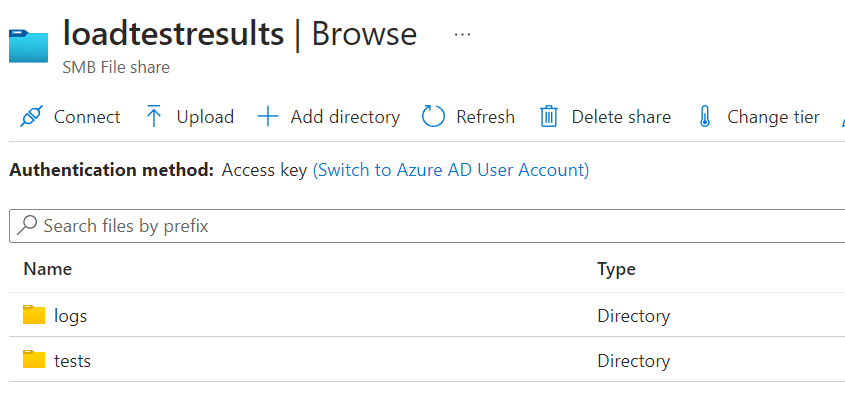

Navigate to the file share in Azure Portal and create 2 folders named logs and tests.
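If you prefer to stay in the terminal, the same two folders can probably be created with the AZ CLI as well. This is a minimal sketch rather than the method used in this article; the helper name `create_share_folders` is hypothetical, and it assumes you pass in the storage account key yourself.

```shell
#!/usr/bin/env bash

# Hypothetical helper: create the logs and tests folders in a file share.
# Arguments: storage account name, storage account key, file share name.
create_share_folders() {
  local account_name="$1" account_key="$2" share_name="$3"
  local folder
  for folder in logs tests; do
    az storage directory create \
      --account-name "$account_name" \
      --account-key "$account_key" \
      --share-name "$share_name" \
      --name "$folder"
  done
}

# Usage, assuming STORAGE_KEY holds the account key:
#   create_share_folders geoloadteststore "$STORAGE_KEY" loadtestresults
```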

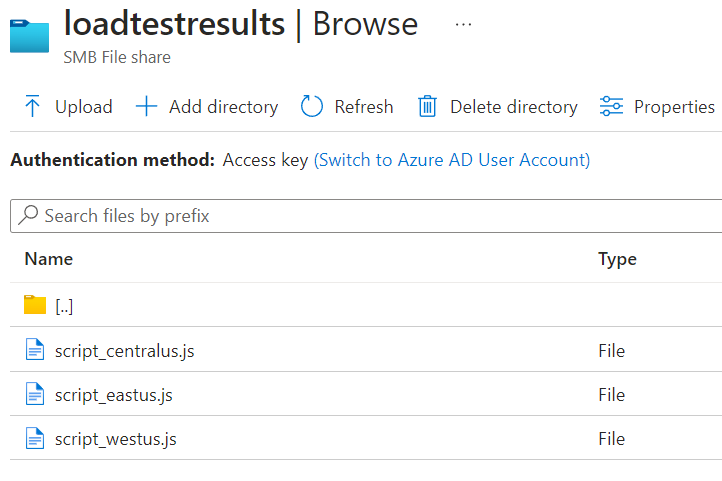

Inside the tests folder, add a K6 script file you have created. I have created multiple script files for different locations.
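Uploading the script can also be done from the command line instead of the portal. The sketch below assumes the tests folder already exists in the share and that the script sits on your local disk; the helper name `upload_test_script` is hypothetical.

```shell
#!/usr/bin/env bash

# Hypothetical helper: upload one local K6 script into the tests folder
# of the file share, keeping its original file name.
# Arguments: account name, account key, share name, local script path.
upload_test_script() {
  local account_name="$1" account_key="$2" share_name="$3" script_file="$4"
  az storage file upload \
    --account-name "$account_name" \
    --account-key "$account_key" \
    --share-name "$share_name" \
    --source "$script_file" \
    --path "tests/$(basename "$script_file")"
}

# Usage, assuming STORAGE_KEY holds the account key:
#   upload_test_script geoloadteststore "$STORAGE_KEY" loadtestresults ./script_eastus.js
```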

For this example, I am using a demo script with some changes to it. Note the change in the name of the summary file. It contains the location name for easy identification of logs.

import http from "k6/http";
import { sleep } from "k6";

export default function () {
  http.get("https://test.k6.io");
  sleep(1);
}

export function handleSummary(data) {
  return {
    "/results/logs/summary_eastus.json": JSON.stringify(data), // the default data object
  };
}

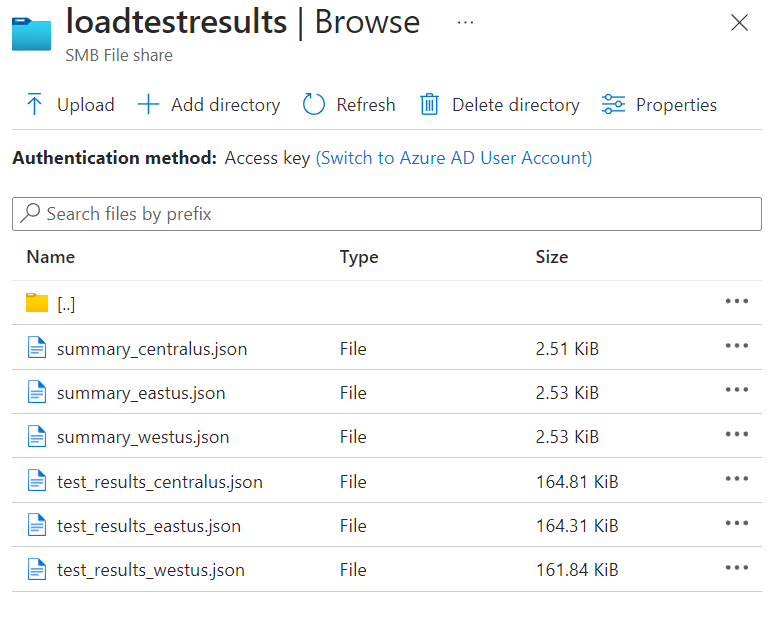

Following the K6 documentation, I have added an additional function named handleSummary. This function generates the summary for the entire test and saves it in JSON format, which I can use later for visualization. It contains the same results you see on your console when you run K6. The other file referenced in the command above, test_results_eastus.json, holds the details of every test run.

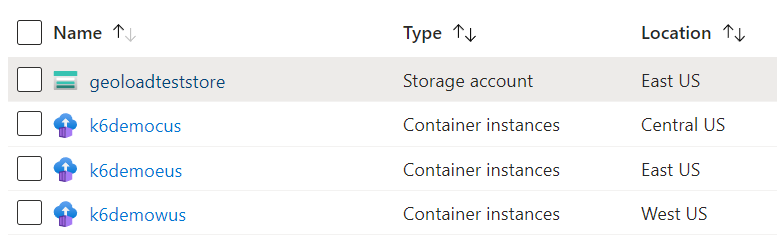

I execute the az container create command three times for 3 different regions.
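Running the same command once per region lends itself to a small loop. The sketch below wraps the article's command in a hypothetical function, `run_k6_in_region`, and derives the container name, script name, and output file name from the region. The `k6demo-<region>` naming and the regions other than eastus are assumptions for illustration, and each region needs a matching script in the tests folder.

```shell
#!/usr/bin/env bash

# Hypothetical helper: start one Azure Container Instance that runs K6
# in the given region. The flags mirror the az container create command
# used in this article; only the region-specific parts are parameterized.
# Arguments: Azure region, storage account key.
run_k6_in_region() {
  local region="$1" storage_key="$2"
  az container create \
    --name "k6demo-${region}" \
    --resource-group cannon \
    --location "$region" \
    --image grafana/k6:latest \
    --azure-file-volume-account-name geoloadteststore \
    --azure-file-volume-account-key "$storage_key" \
    --azure-file-volume-share-name loadtestresults \
    --azure-file-volume-mount-path /results \
    --command-line "k6 run --duration 10s --vus 5 /results/tests/script_${region}.js --out json=/results/logs/test_results_${region}.json" \
    --restart-policy Never
}

# Usage, assuming STORAGE_KEY holds the account key. The second and third
# regions are example values, not the ones used in the article:
#   for region in eastus westeurope southeastasia; do
#     run_k6_in_region "$region" "$STORAGE_KEY"
#   done
```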

I now have three Azure Container Instances, each of which ran the K6 load test from a different location. You can also see the location of each Azure Container Instance in the above screenshot. After the load test finishes, I can view my JSON logs in the file share.
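To work with the logs locally, they can be copied out of the file share in one go. This is a rough sketch, assuming the logs folder layout described in this article; the helper name `fetch_k6_logs` and the local directory are hypothetical, and the `logs/*` pattern is my assumption about how the glob matches paths in the share.

```shell
#!/usr/bin/env bash

# Hypothetical helper: download everything under logs/ in the file share
# into a local directory, creating that directory first because
# download-batch expects the destination to exist.
# Arguments: storage account key, local destination directory.
fetch_k6_logs() {
  local storage_key="$1" dest_dir="$2"
  mkdir -p "$dest_dir"
  az storage file download-batch \
    --account-name geoloadteststore \
    --account-key "$storage_key" \
    --source loadtestresults \
    --pattern "logs/*" \
    --destination "$dest_dir"
}

# Usage, assuming STORAGE_KEY holds the account key:
#   fetch_k6_logs "$STORAGE_KEY" ./k6-results
```

If a run looks wrong, `az container logs --resource-group cannon --name k6demo` should show the console output that K6 printed inside the container.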

This way I can load test my web apps or services from anywhere, or at least from all the Azure regions.

Improvement Areas

With the above working as expected, I think there are a few things that I can really improve on.

Automation

The entire process is manual and error-prone. It would be good to automate it so that execution needs little user intervention. Writing the load tests would still be the user's responsibility, though. Going forward, I would like to automate the creation of all these resources using Terraform and then execute the Terraform scripts automatically. If you want to do this for any of your Terraform projects, refer to my article on Medium.

Visualization

After getting the test logs from the file share, I also want to see what the load test results look like. As of now, I have no tool to do so, but I found a few on GitHub that can visualize the output of K6 load tests. I might write one myself or use an open-source one; I am not sure about that at the moment.

I hope you enjoyed this article and learned a thing or two about Azure and K6. Here are a few more resources that will be helpful.