Over the past decade Marvel has gathered a huge fan following, and many of those fans want to know more about how the characters, stories, and events of the Marvel comics universe are mapped or linked to each other.

In 2017 (if I remember correctly), at the Microsoft Build event, Microsoft announced the Bot Framework and did a demo with the CosmosDB graph database, which uses the Gremlin query language from Apache TinkerPop. It was a great demo, and I was really impressed by how they did it, but unfortunately I was unable to find the source code for that demo anywhere. So I thought I should at least build the graph database for my own use and work on an NLU bot sometime later.

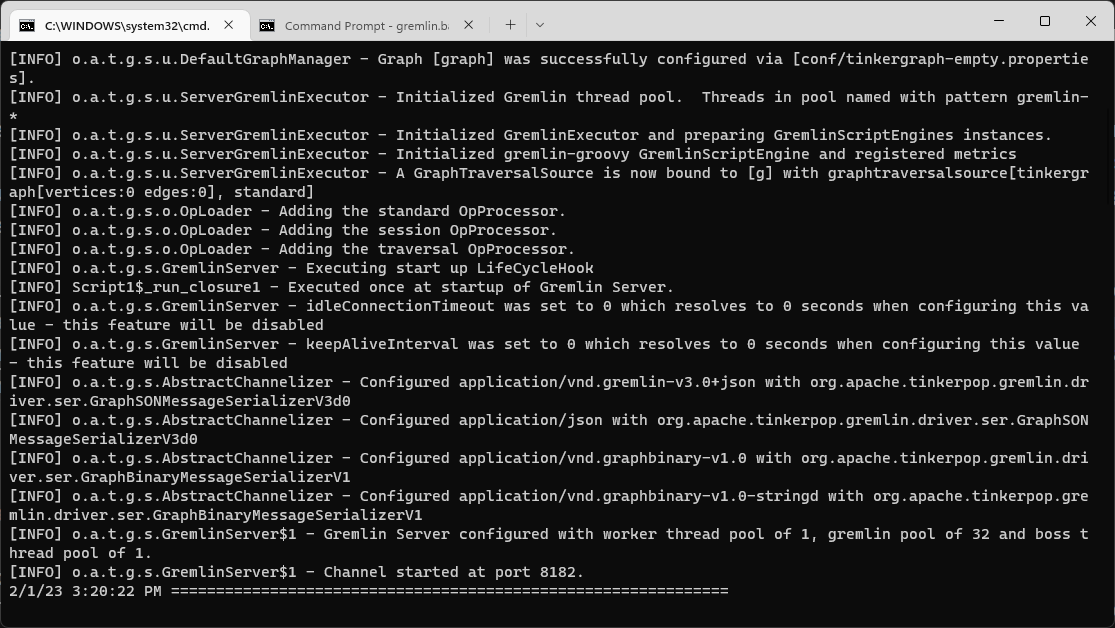

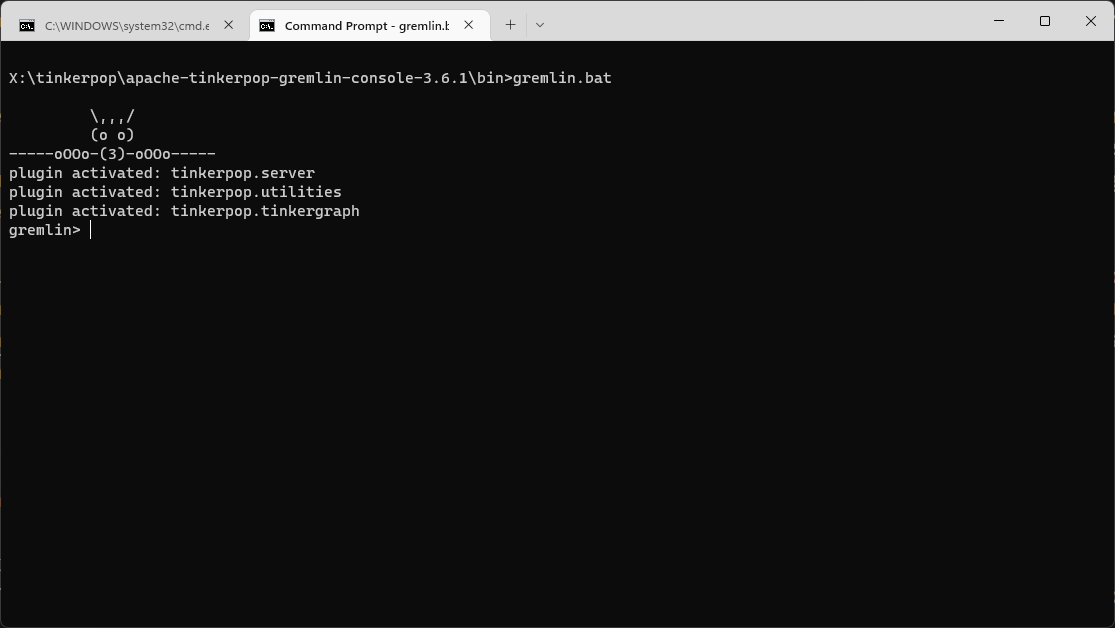

To get started, I will set up the database using Apache TinkerPop rather than CosmosDB, because of cost and development speed. I will also make use of Gremlin.NET, a wrapper around the Gremlin query language. Let’s download Apache TinkerPop Gremlin Server and Gremlin Console. Both come as .zip files, so extract each one into a folder, navigate to the bin directory inside each extracted folder, and from a command/shell prompt run the .bat file if you are on Windows, or the .sh file if you are on Linux or WSL on Windows.

After both the server and the console are running, go to the console terminal window and connect to the local TinkerPop server. Note that the console is independent of the server running on the local machine, so it will not automatically connect to the running local instance. We have to connect to the local server instance ourselves by executing the command below in the Gremlin console.

gremlin> :remote connect tinkerpop.server conf/remote.yaml session

If you are unable to connect to the local server instance, the problem might be in your config file (.yaml), which you can find in the conf folder inside your console folder. Here are the contents of my remote.yaml file.

hosts: [localhost]

port: 8182

serializer: { className: org.apache.tinkerpop.gremlin.driver.ser.GraphBinaryMessageSerializerV1, config: { serializeResultToString: true }}

With the connection established, we can now import the GraphSON data into the database. You can also refer to my GitHub repo, where I have used raw data from the web to generate the edges and vertices for all Marvel characters. The raw data, which is in CSV format, has some repeated connections, and the repo code ensures that there are no duplicate connections. You can also refer to the code if you plan to connect to and play with the TinkerPop graph database. Running the code and setting up the database is a bit time-consuming, so I would suggest downloading the mcu.json file from the repo and importing it, which takes only a few seconds.
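The duplicate-removal idea can be sketched in a few lines. This is not the repo's code, which the post does not show; it is a minimal Python sketch. The two-column source,target CSV layout, the function name `unique_connections`, and the sample rows are all assumptions made for illustration. It drops only exact repeats and treats (a, b) and (b, a) as different connections.

```python
import csv
import io

def unique_connections(csv_text):
    """Return (source, target) pairs from CSV text, dropping exact repeats."""
    seen = set()
    edges = []
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) < 2:
            continue  # skip blank or malformed rows
        pair = (row[0].strip(), row[1].strip())
        if pair not in seen:
            seen.add(pair)
            edges.append(pair)  # keep first occurrence, preserve input order
    return edges

# Hypothetical sample rows, not taken from the real data set.
sample = "CAPTAIN AMERICA,IRON MAN\nCAPTAIN AMERICA,IRON MAN\nIRON MAN,THOR\n"
print(unique_connections(sample))
```

Using a set for the membership check keeps the pass linear in the number of rows, which matters when the raw file contains many repeated pairs.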

The mcu.json data file which you downloaded from the repo should be placed in the Gremlin Server's current working directory. After you place the file, execute the command below in the Gremlin console.

gremlin> :> g.io("mcu.json").read().iterate()

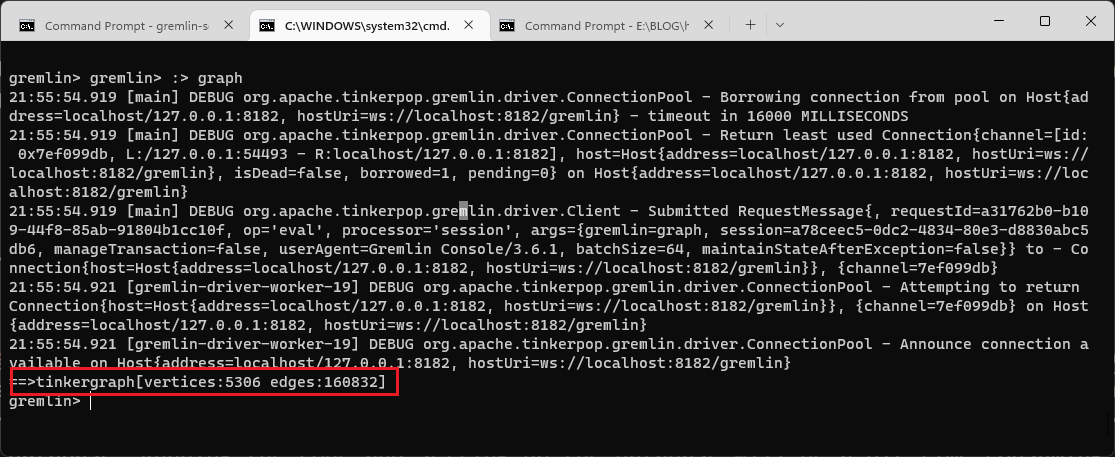

The above command will take a few seconds to execute and will then return you to the Gremlin console prompt. We can now verify the data by executing this command.

gremlin> :> graph

If all goes well, you should see the graph details as the output of the above command.

Let’s write some Gremlin queries and find out some information. Go to the Gremlin console and try the queries below.

How many people does Cap know?

gremlin> :> g.V().has("name","CAPTAIN AMERICA").outE("knows").count()

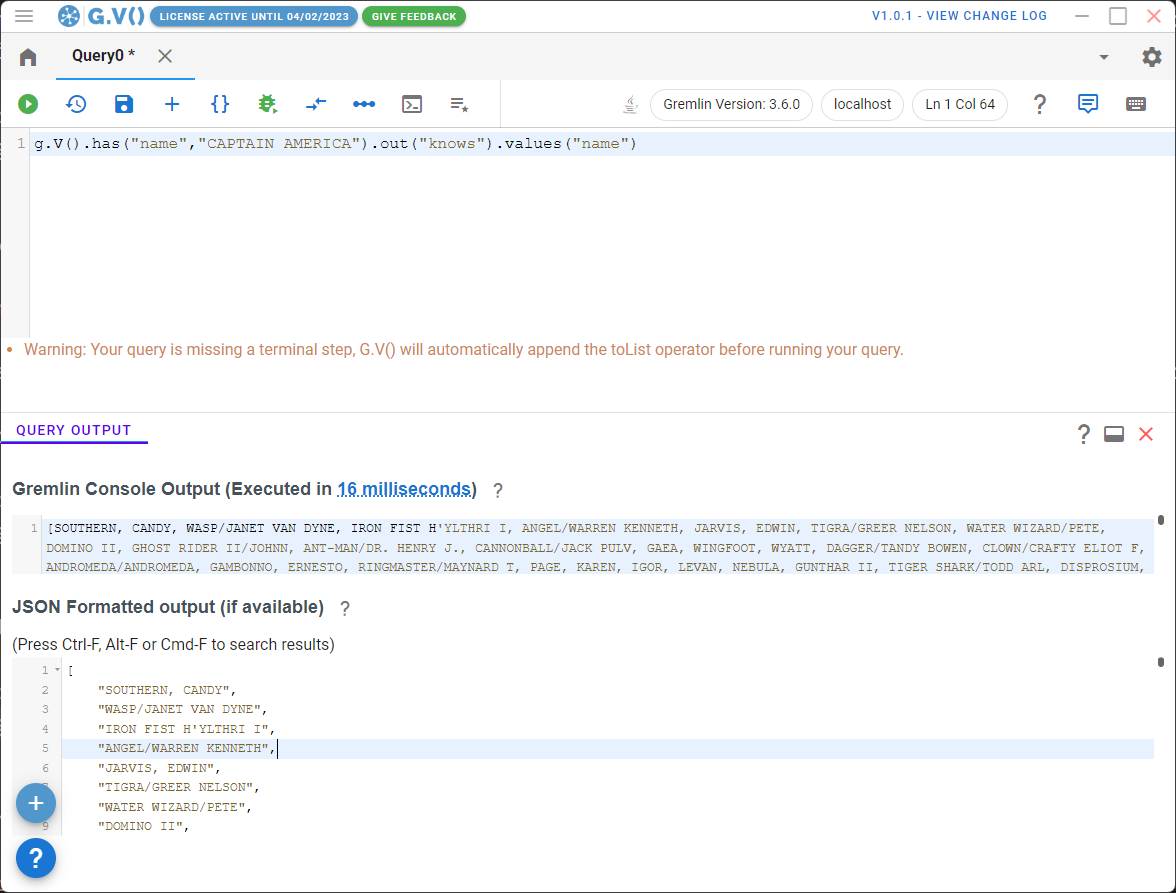

And who are those people?

gremlin> :> g.V().has("name","CAPTAIN AMERICA").out("knows").values("name")

Characters who have IRON in their name. Note that this is case-sensitive.

gremlin> :> g.V().has('name', containing('IRON')).values('name')

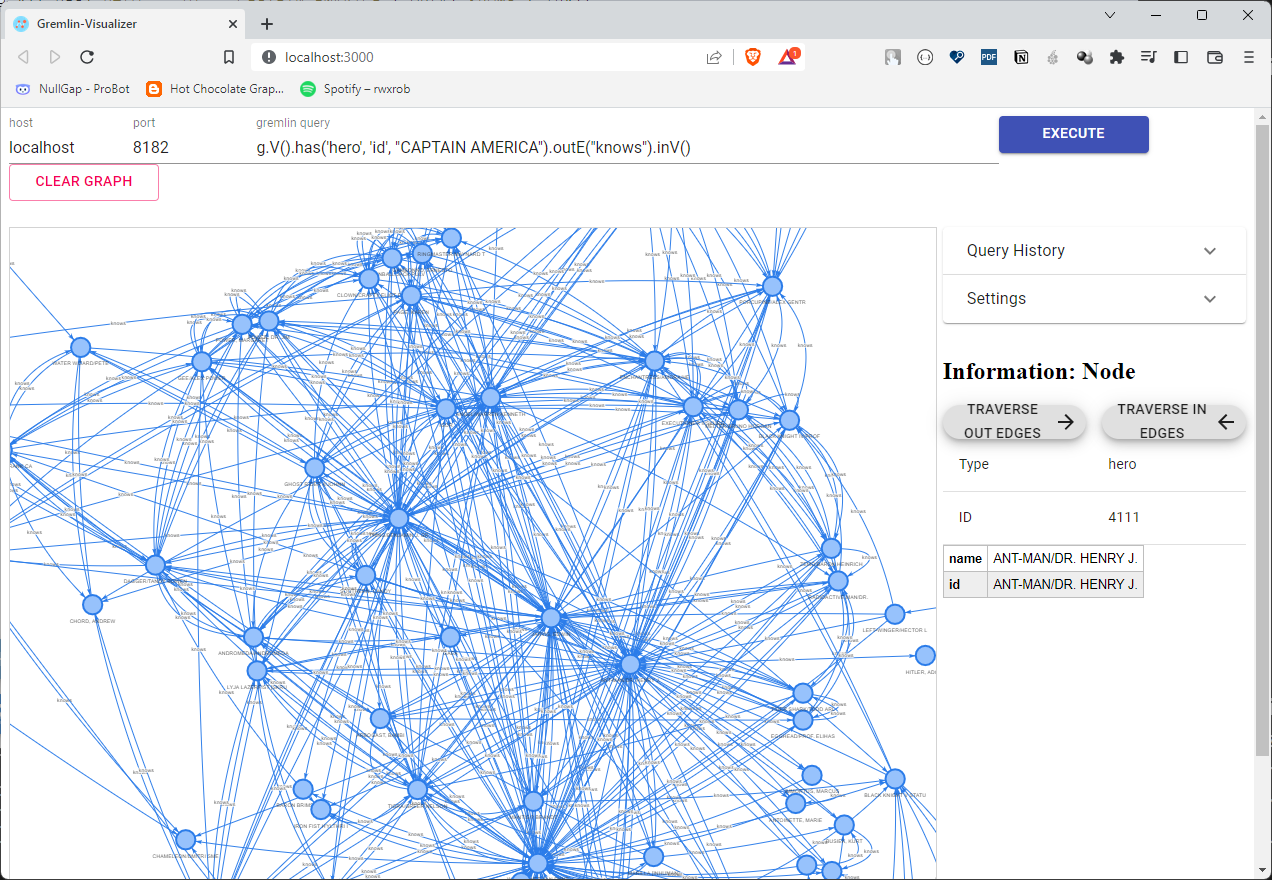
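To make the three queries above more concrete, here is a rough in-memory analogue in Python. It does not talk to Gremlin Server at all; the `knows` dictionary is a made-up toy graph and the function names are hypothetical, chosen only to mirror what each traversal asks for.

```python
# Toy adjacency list standing in for the graph; names and edges are made up.
knows = {
    "CAPTAIN AMERICA": ["IRON MAN", "THOR", "IRON FIST"],
    "IRON MAN": ["CAPTAIN AMERICA"],
    "THOR": [],
    "IRON FIST": [],
}

def count_known(name):
    # Analogue of g.V().has("name", name).outE("knows").count()
    return len(knows.get(name, []))

def known_names(name):
    # Analogue of g.V().has("name", name).out("knows").values("name")
    return list(knows.get(name, []))

def names_containing(fragment):
    # Analogue of has('name', containing(fragment)); the match is case-sensitive
    return [n for n in knows if fragment in n]

print(count_known("CAPTAIN AMERICA"))
print(names_containing("IRON"))
print(names_containing("iron"))  # lower case finds nothing in this toy graph
```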

As this is a graph database, it would be nice to see the data visually. Of the few visualization tools I have used so far, GDotV seems to be a popular one.

You can also visualize the graph using a simple open-source graph visualization tool called gremlin-visualizer. There are other visualization tools as well, in case you want to work with more advanced feature sets. I personally use the basic console and a mix of gdotv and gremlin-visualizer, as that fits my needs. For example, I can execute the query below and see the visualization in gremlin-visualizer, but I was unable to get the same query to execute in gdotv version 1.0.1.

g.V().has('hero', 'id', "CAPTAIN AMERICA").outE("knows").inV()


If you have ever worked with Azure Functions or AWS Lambda, you are probably familiar with the concept of serverless computing. To try out a serverless function, you need an Azure or an AWS account. The SDKs from both platforms let you build, develop, and test functions on your local development machine, but when it comes to a production environment, you again have to turn to Azure or AWS for hosting. OpenFaaS is an open-source product developed by Alex Ellis which helps developers deploy event-driven functions, time-triggered functions, and microservices to Kubernetes without repetitive, boilerplate coding. Let’s see how we can deploy OpenFaaS on Ubuntu 20.04 LTS using k3s. You can also use another Kubernetes flavour that suits your needs.

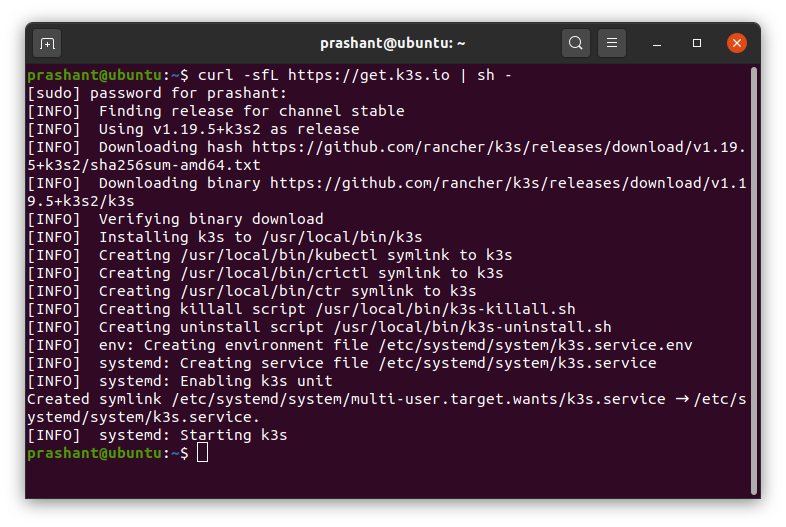

I am making use of Lightweight Kubernetes, also known as k3s. It is a certified Kubernetes distribution built for IoT and edge computing.

$ curl -sfL https://get.k3s.io | sh -

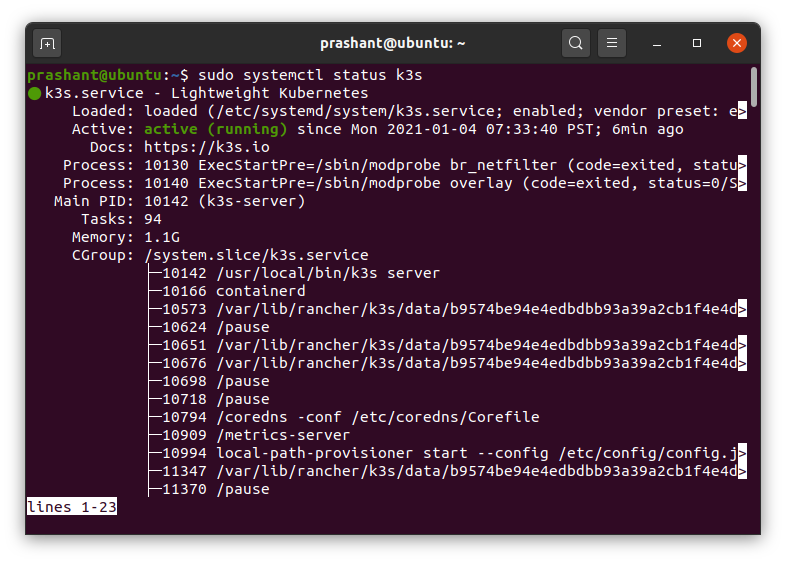

After the installation is completed, you can check if the k3s service is running by executing the below command.

$ sudo systemctl status k3s

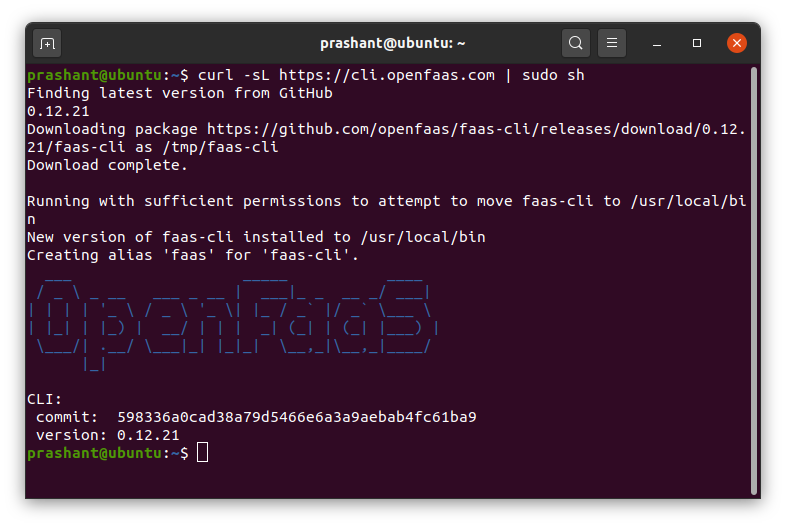

faas-cli is a command-line utility which lets you work with OpenFaaS.

$ curl -sL https://cli.openfaas.com | sudo sh

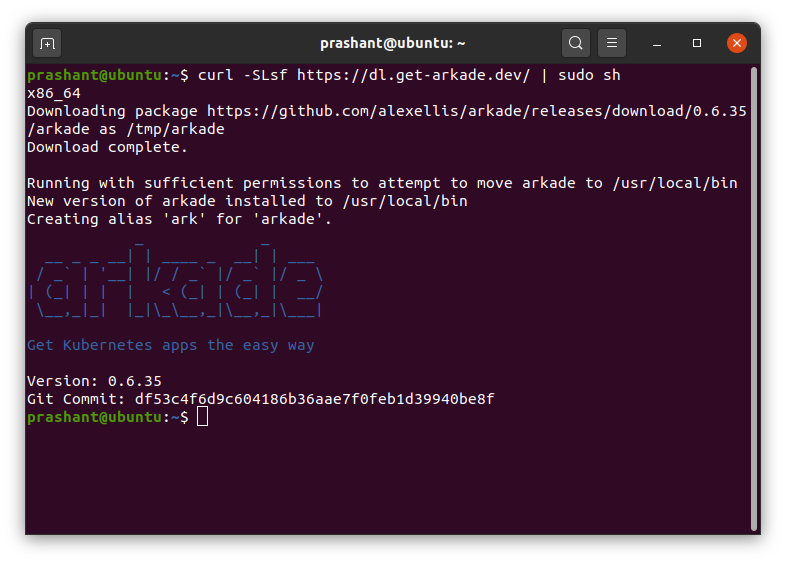

Arkade is a Kubernetes app installer. It allows you to install apps and charts to your cluster in one command. The official OpenFaaS documentation states that using arkade is the fastest option. If you wish, you can also use Helm for the installation, but as I am installing on a single-node machine, I will stick with the arkade option.

Run the below command to download and install it.

$ curl -SLsf https://dl.get-arkade.dev/ | sudo sh

Installing OpenFaaS with arkade is extremely simple and you can get the installation done by executing this command:

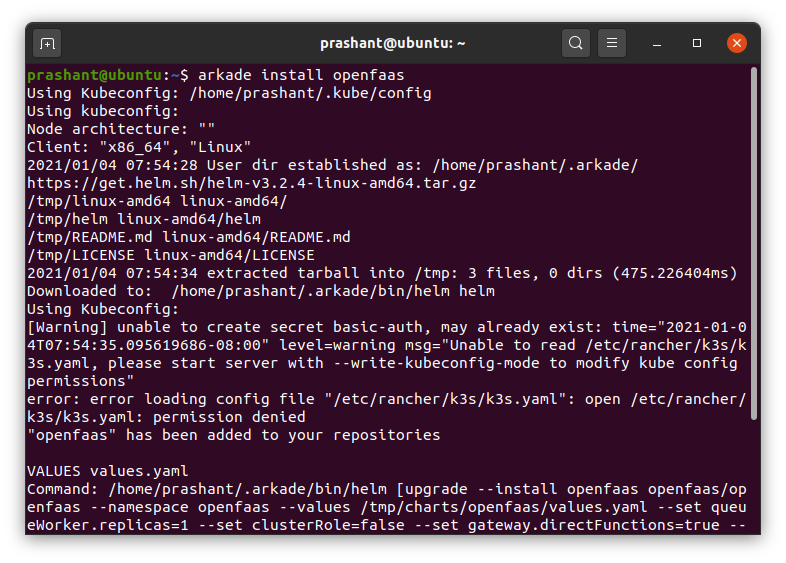

$ arkade install openfaas

This should install OpenFaaS, but on my machine it resulted in an error. Below are the two screenshots that I took when I got this error. The first screenshot shows a permission error on /etc/rancher/k3s/k3s.yaml. To resolve it, I changed the permissions on the file to 744, and the error went away.
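If octal modes are unfamiliar, the short Python sketch below shows what a mode such as 744 grants. The helper name `describe` is hypothetical, and the 600 starting mode is an assumption used for comparison, not something shown in the screenshots. The point is that 744 adds read access for group and others, which is what lets a non-root user read the kubeconfig; 644 grants the same read access without the execute bit, which a config file does not need.

```python
import stat

def describe(mode):
    """Render a permission mode for a regular file the way `ls -l` would."""
    return stat.filemode(stat.S_IFREG | mode)

print(describe(0o600))  # assumed starting point: owner-only read/write
print(describe(0o744))  # the mode used in this post
print(describe(0o644))  # read access for everyone, no execute bit
```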

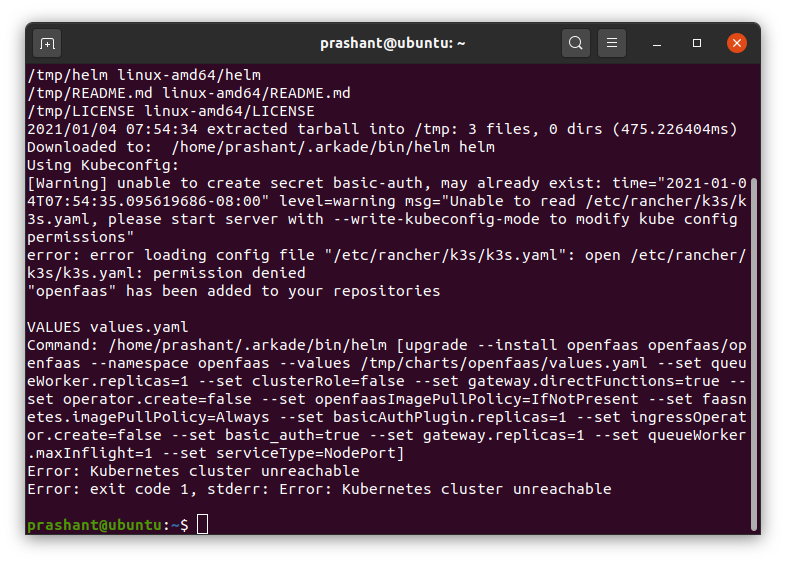

If you scroll down to the end of the command output, you will notice another error, which states that the Kubernetes cluster is unreachable.

Even though the k3s service was up and running, I could not figure out what the problem was. So I searched the web and found an issue thread on GitHub in which one of the commenters was able to fix this error by running the command below.

$ kubectl config view --raw > ~/.kube/config

I also tried this installation on a Raspberry Pi 4 running Raspbian Buster x64, and I saw the same error messages there.

After this, you can run the OpenFaaS installation with arkade again, and this time it should be successful. Here is the output on my system after the installation succeeded.

prashant@ubuntu:~$ arkade install openfaas

Using Kubeconfig: /etc/rancher/k3s/k3s.yaml

Using kubeconfig:

Node architecture: "amd64"

Client: "x86_64", "Linux"

2021/01/04 08:34:42 User dir established as: /home/prashant/.arkade/

Using Kubeconfig:

[Warning] unable to create secret basic-auth, may already exist: Error from server (AlreadyExists): secrets "basic-auth" already exists

"openfaas" has been added to your repositories

VALUES values.yaml

Command: /home/prashant/.arkade/bin/helm [upgrade --install openfaas openfaas/openfaas --namespace openfaas --values /tmp/charts/openfaas/values.yaml --set clusterRole=false --set operator.create=false --set gateway.replicas=1 --set queueWorker.replicas=1 --set basic_auth=true --set serviceType=NodePort --set gateway.directFunctions=true --set openfaasImagePullPolicy=IfNotPresent --set faasnetes.imagePullPolicy=Always --set basicAuthPlugin.replicas=1 --set ingressOperator.create=false --set queueWorker.maxInflight=1]

Release "openfaas" does not exist. Installing it now.

NAME: openfaas

LAST DEPLOYED: Mon Jan 4 08:34:45 2021

NAMESPACE: openfaas

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

To verify that openfaas has started, run:

kubectl -n openfaas get deployments -l "release=openfaas, app=openfaas"

=======================================================================

= OpenFaaS has been installed. =

=======================================================================

# Get the faas-cli

curl -SLsf https://cli.openfaas.com | sudo sh

# Forward the gateway to your machine

kubectl rollout status -n openfaas deploy/gateway

kubectl port-forward -n openfaas svc/gateway 8080:8080 &

# If basic auth is enabled, you can now log into your gateway:

PASSWORD=$(kubectl get secret -n openfaas basic-auth -o jsonpath="{.data.basic-auth-password}" | base64 --decode; echo)

echo -n $PASSWORD | faas-cli login --username admin --password-stdin

faas-cli store deploy figlet

faas-cli list

# For Raspberry Pi

faas-cli store list \

--platform armhf

faas-cli store deploy figlet \

--platform armhf

# Find out more at:

# https://github.com/openfaas/faas

Thanks for using arkade!

The output contains important information that we need to get started with faas-cli and OpenFaaS. As we have already installed faas-cli, we now need to forward the gateway to the machine.

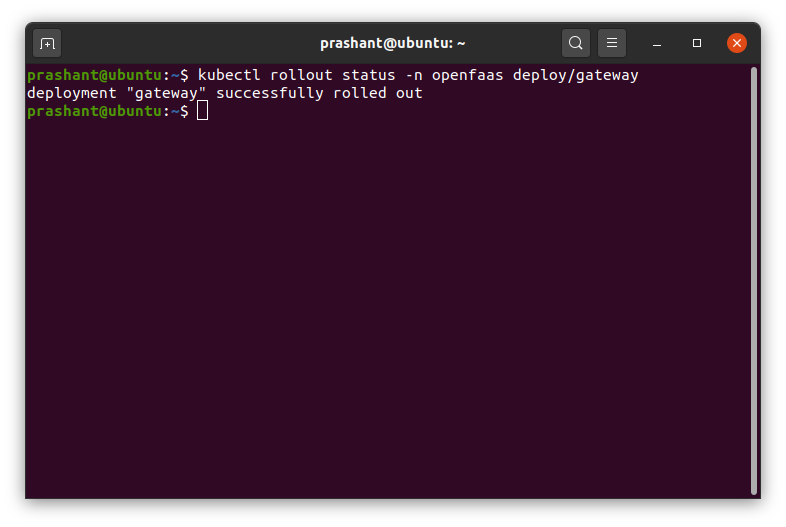

First we will check the rollout status of the gateway by issuing the below command:

$ kubectl rollout status -n openfaas deploy/gateway

The above command should report that the rollout was successful. After this we can forward the gateway to the machine.

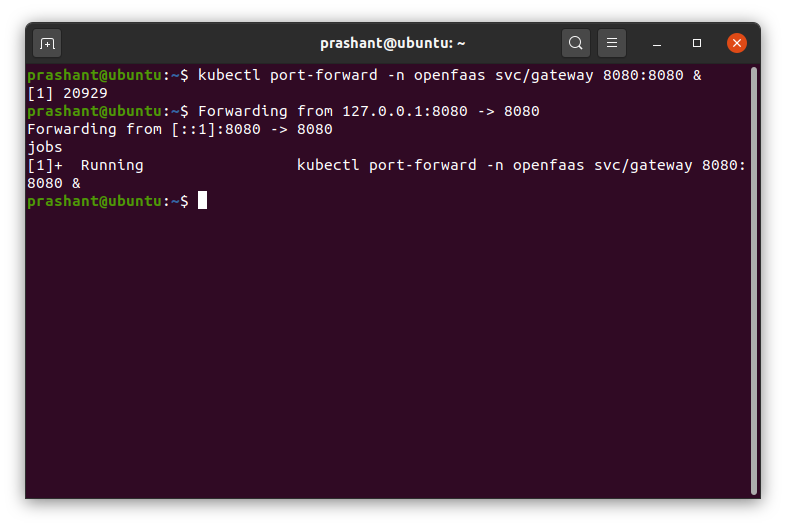

$ kubectl port-forward -n openfaas svc/gateway 8080:8080 &

The & sign at the end of the command runs it in the background. You can type jobs to check the status of the job.

We also need to check whether the deployments are in a ready state. To check the deployment state, execute the command:

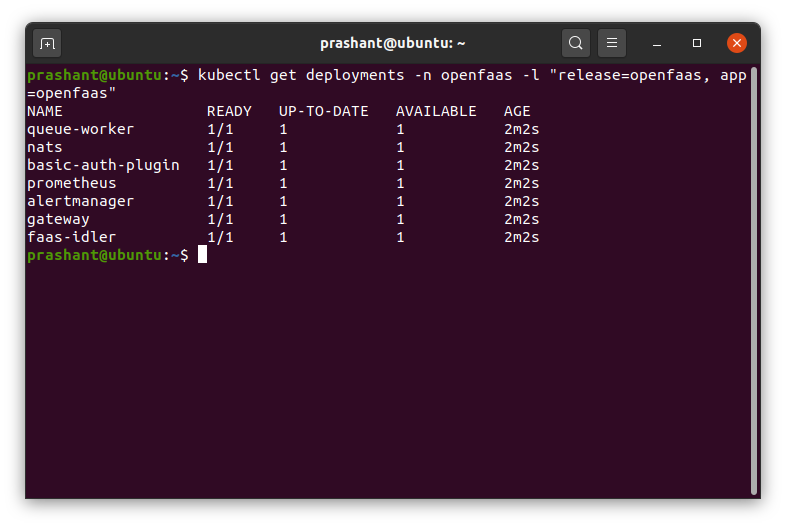

$ kubectl get deployments -n openfaas -l "release=openfaas, app=openfaas"

If any of the deployed apps is not ready, you will see it here. Check the READY column in the command output; a value such as 0/1 means that the deployment is not ready yet, and you should check back after some time. Once your output looks like the one in the screenshot above, you are good to go.
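Reading the READY column can also be automated. The Python sketch below parses text in the shape printed by `kubectl get deployments` and returns the deployments that are not ready yet. The function name `not_ready` and the sample text are hypothetical; the sample is hand-written to match the usual column layout and is not output captured from my cluster.

```python
def not_ready(kubectl_output):
    """Return names of deployments whose READY column shows fewer pods than desired."""
    lines = kubectl_output.strip().splitlines()
    pending = []
    for line in lines[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 2:
            continue
        name, ready = fields[0], fields[1]
        have, want = ready.split("/")  # e.g. "0/1" -> ("0", "1")
        if int(have) < int(want):
            pending.append(name)
    return pending

# Hypothetical output, written by hand for illustration.
sample = """\
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
gateway        1/1     1            1           2m
queue-worker   0/1     1            0           2m
"""
print(not_ready(sample))
```

An empty list means every deployment in the output has reached its desired replica count.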

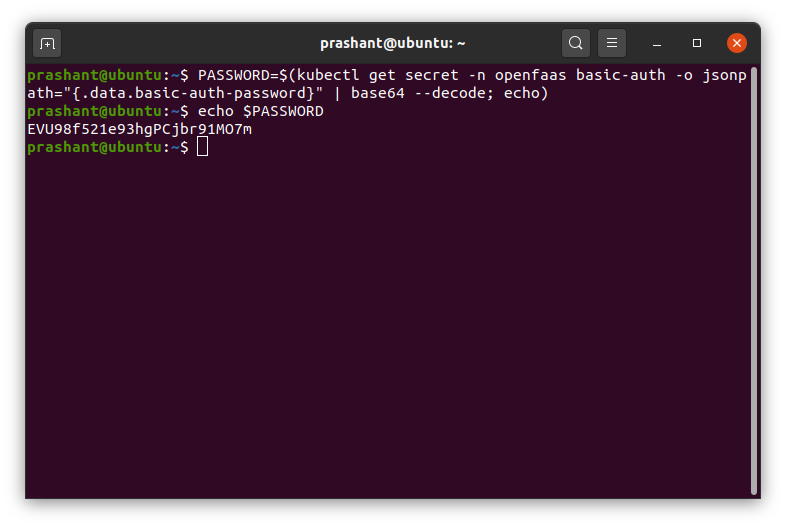

Now the last step is to acquire the password for OpenFaaS UI which we will use to manage OpenFaaS. To do that execute the below command:

$ PASSWORD=$(kubectl get secret -n openfaas basic-auth -o jsonpath="{.data.basic-auth-password}" | base64 --decode; echo)

echo -n $PASSWORD | faas-cli login --username admin --password-stdin

You can then view the password by printing the value of the PASSWORD variable using the echo command.

$ echo $PASSWORD

Copy and save the password securely.
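The `base64 --decode` step in the command is needed because Kubernetes stores secret values base64-encoded under `.data`. As a small illustration of that decoding step alone, here is a Python sketch; the function name `decode_secret` and the example value are made up, and a real password would come from the basic-auth secret as shown above.

```python
import base64

def decode_secret(encoded):
    """Decode a base64 value as found under .data in a Kubernetes secret."""
    return base64.b64decode(encoded).decode("utf-8")

# Made-up value for illustration only.
encoded = base64.b64encode(b"example-password").decode("ascii")
print(encoded)
print(decode_secret(encoded))
```

Note that base64 is an encoding, not encryption, so anyone who can read the secret can recover the password.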

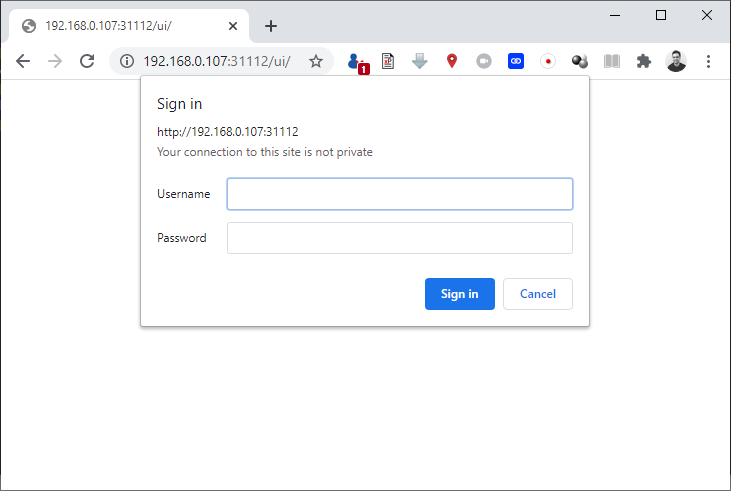

Now let’s try accessing the OpenFaaS UI (OpenFaaS Portal) by navigating to http://localhost:31112/ui, or by using the IP address of the machine instead of localhost if you are accessing it from another machine on your network.

Username is set to admin by default and the password is the one which you saved in the above step.

Click on the Deploy New Function link located at the center of the home page or in the left sidebar. You will see a list of the functions you can deploy; select a function from the list and then click the Deploy button at the bottom of the dialog.

Note that the list of functions that you see in the Deploy New Function dialog will be different on a Raspberry Pi, as OpenFaaS only lists the functions which are available for the platform on which it is running.

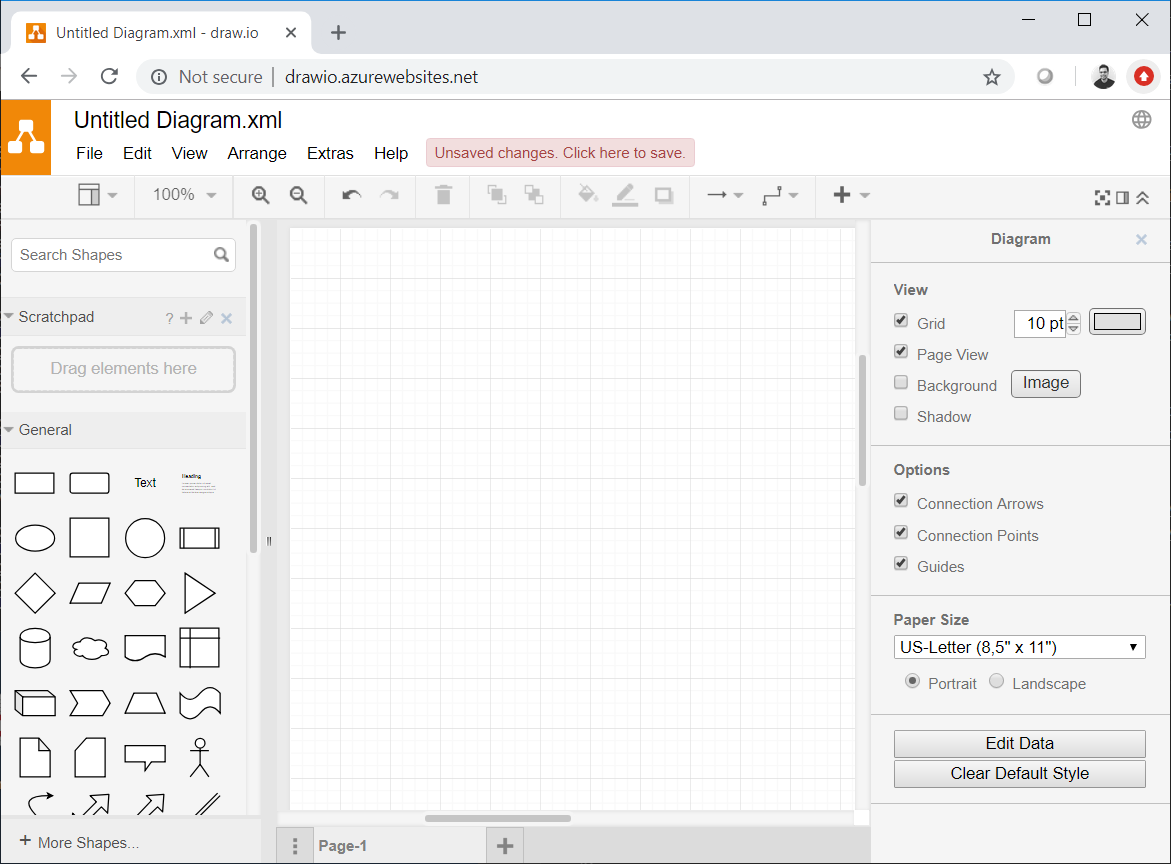

Draw.IO is a great alternative to Microsoft Visio. It allows me to quickly draw flowcharts and diagrams. I have been using it for a long time, and the best part is that Draw.IO is open source on GitHub, with web and offline (desktop) versions. I personally use the desktop version, but what if I would like to host it in my own org, or work with a web instance instead of the desktop version?

The problem I would face with the web version is that part of it is written in Java. Fortunately, Draw.IO has an image hosted on Docker Hub, from where I can pull the image and get it working in no time. Instead of using the image on my local machine, I will try Web Apps for Containers in Azure. You may also know it as App Service in Azure.

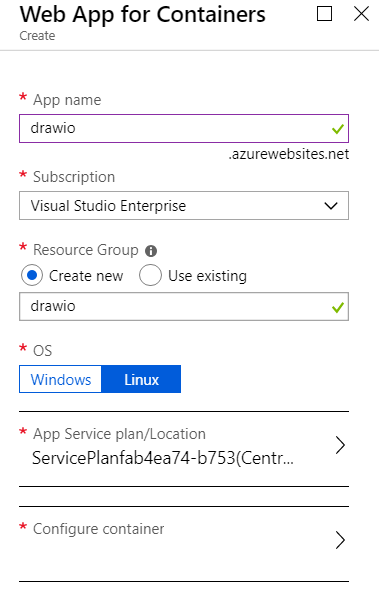

To get started with Web Apps for Containers in Azure, create a new resource in the Azure portal and then search for Web Apps for Containers.

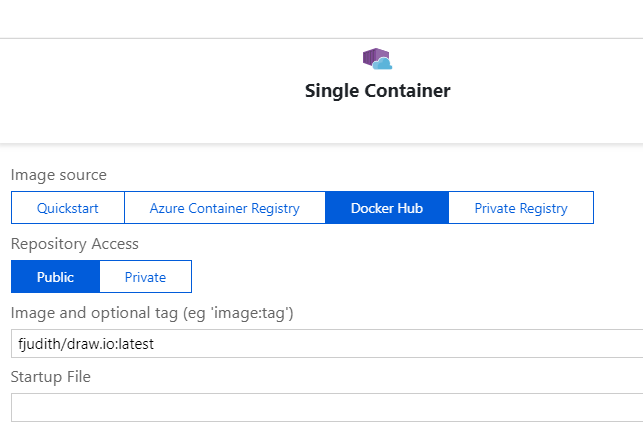

Give your web app a name and fill in the other information. For the OS, I have selected Linux. The next step is to select the official draw.io image from Docker Hub.

You can also select the image you want to host by providing the tag name. If you don’t provide a tag, it picks up latest by default. You can check the different tags for draw.io and select the one you want to use. After selecting the right image with the tag, click the Apply button to select the image for the container. Then click the Create button to create the web app with this container.

Note that there is no Standard pricing tier available when you select Web Apps for Containers. It uses the Premium tier to run the containers as a PaaS offering.

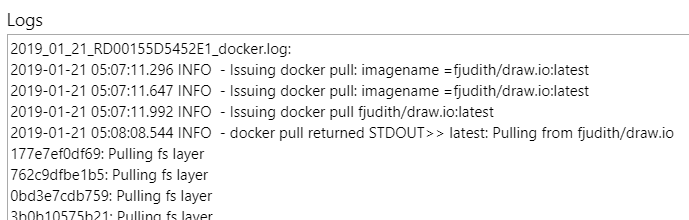

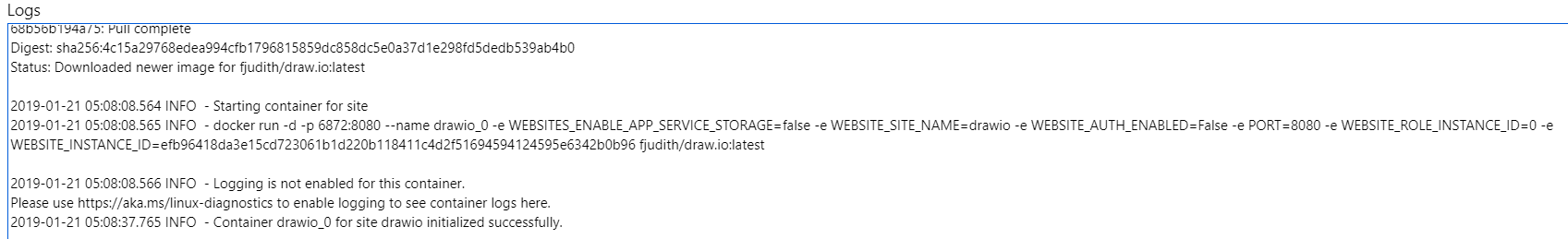

After the web app has been created successfully, quickly navigate to the Container settings section under Settings. If you are quick enough, you will be able to see the logs in real time and watch the image being pulled from Docker Hub and started. Below are the screenshots of the logs generated by my web app.

You can clearly see in the logs how the docker pull command was initiated and how the command to start the container was executed. You can now navigate to the URL of your web app to see the application in action.

When you visit the web app for the first time, it might take a few extra seconds to load completely. This is a one-time delay, and after that the app will open almost instantly.

Recently I moved from Windows to Ubuntu on one of my laptops, and since then I had been looking for a way to create VHDs or some sort of image file that I can use as a container. I looked for a solution and found a working one scattered across several places, so I am putting it all together in this blog post.

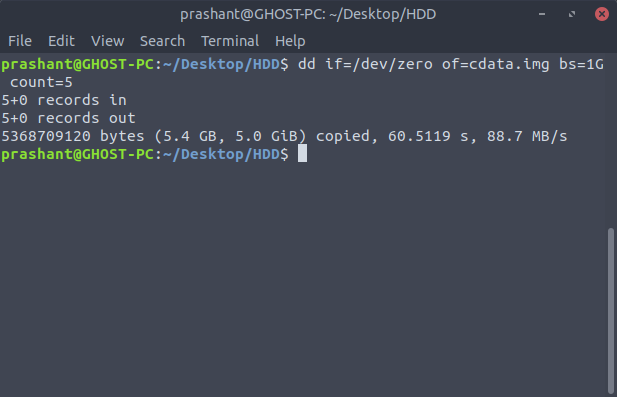

First we need to create an image file (.img) by using the dd command. The dd command is used to copy and convert files. The reason it is not called cc is that the name was already in use by the C compiler. Here is the command.

$ dd if=/dev/zero of=cdata.img bs=1G count=5

Note: The command execution time depends on the size of the image you have opted for.

The if parameter stands for input file and names the source to read from (here /dev/zero, which supplies zero bytes). Likewise, of stands for output file and creates the .img file with the name you specify. The third parameter, bs, stands for block size, and count is the number of blocks to write, so the size of the image is the block size multiplied by the count. The suffix on the block size sets the unit: K for kilobytes (KB), G for gigabytes (GB), T for terabytes (TB), P for petabytes (PB), and so on. Here I am creating 5 blocks of 1 GB each for the image file named cdata.img.
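The size arithmetic can be checked with a few lines of Python. This is a sketch with a hypothetical helper name, `image_size_bytes`, and it assumes GNU dd, where a bare single-letter suffix such as G is a power of 1024 (so 1G is 1024 × 1024 × 1024 bytes). It handles only the single-letter suffixes listed in the table below.

```python
# Multipliers for single-letter suffixes as GNU dd interprets them (powers of 1024).
SUFFIXES = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4, "P": 1024**5}

def image_size_bytes(bs, count):
    """Total bytes written by dd for a given bs string (e.g. "1G") and block count."""
    if bs[-1] in SUFFIXES:
        block = int(bs[:-1]) * SUFFIXES[bs[-1]]
    else:
        block = int(bs)  # plain number of bytes, no suffix
    return block * count

# bs=1G count=5, as in the command above
print(image_size_bytes("1G", 5))
```

The same total could be reached with, for example, bs=1M count=5120; a smaller block size mainly changes how much memory dd uses per write.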

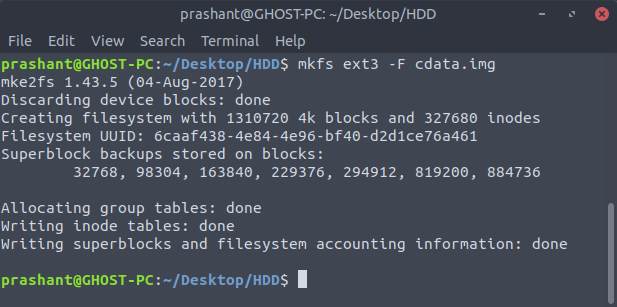

This command creates the .img file, but you cannot mount it yet. First you have to format the image so that it has a file system. To do this, use the mkfs command.

$ mkfs.ext3 -F cdata.img

I am creating an ext3 filesystem on my image file. The -F flag forces the operation, which is needed because the target is a regular file rather than a block device. Here is the output of the command.

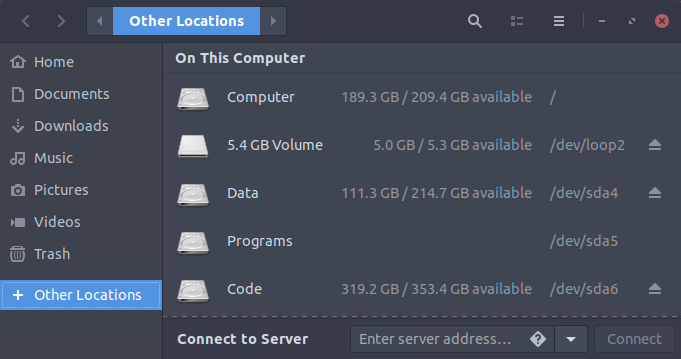

Now we can mount the image file by using the mount command (the mount point directory, ~/Desktop/HDD here, must already exist):

$ sudo mount -o loop cdata.img ~/Desktop/HDD/

Once the above command succeeds, you can see the image mounted on your desktop. You can unmount the image by clicking the eject or unmount button, as shown in the screenshot below, or by executing the umount command.

To unmount the image from the command line:

$ sudo umount ~/Desktop/HDD

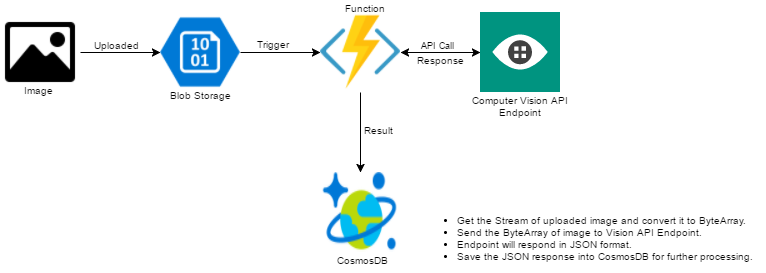

I started to work on a project which combines a lot of intelligent APIs and machine learning. One of the things I have to accomplish is to extract the text from images that are uploaded to storage. For this part of the project I planned to use the Microsoft Cognitive Services Computer Vision API. Here is the relevant extract from my architecture diagram.

Let’s get started by provisioning a new Azure Function.

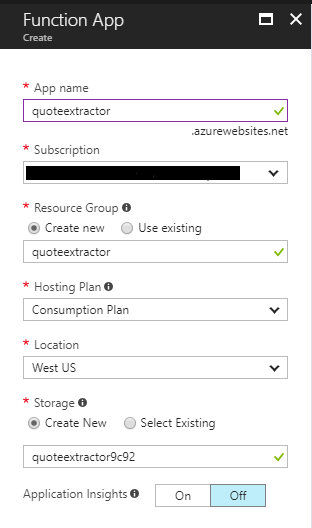

I named my Function App quoteextractor and selected Consumption Plan as the Hosting Plan instead of App Service Plan. If you choose the Consumption Plan, you are billed only for what you use, that is, whenever your function executes. On the other hand, if you choose an App Service Plan, you are billed monthly based on the service plan you choose, even if your function executes only a few times a month or not at all. I have selected West US as the Location because the Cognitive Services subscription I have exposes the API endpoint from West US. I also created a new Storage Account. Click the Create button to create the Function App.

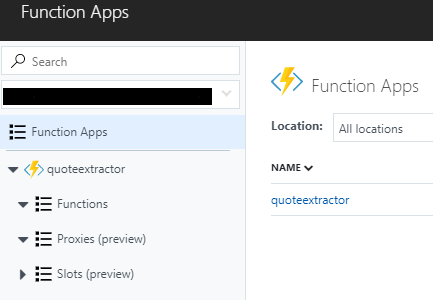

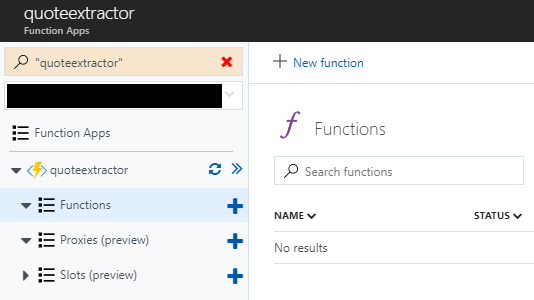

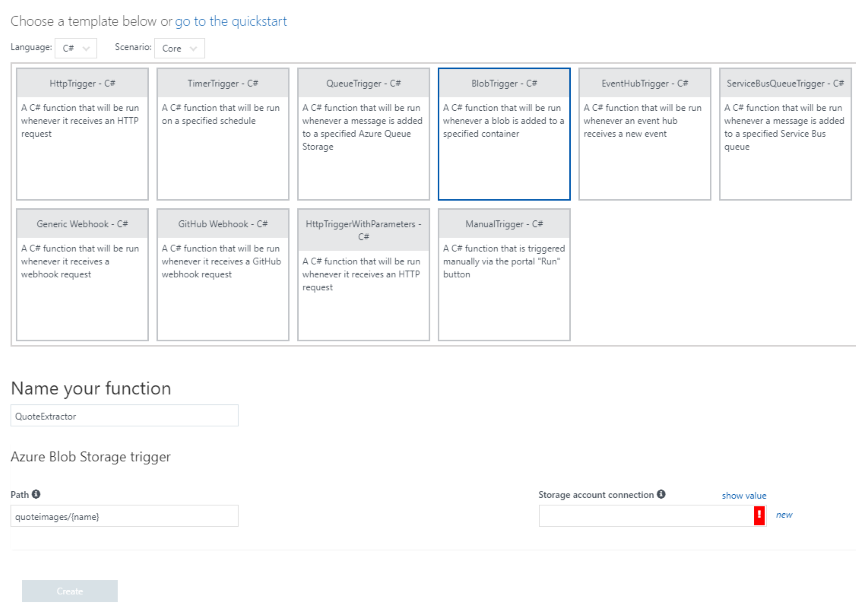

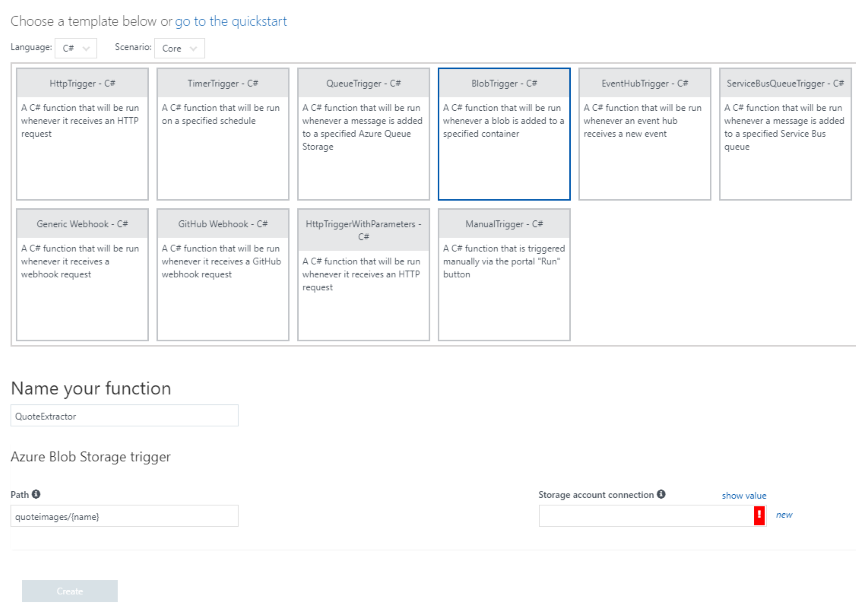

After the Function App is created successfully, create a new function in the Function App quoteextractor. Click on the Functions on the left hand side and then click New Function on the right side window to create a new function as shown in the below screenshot.

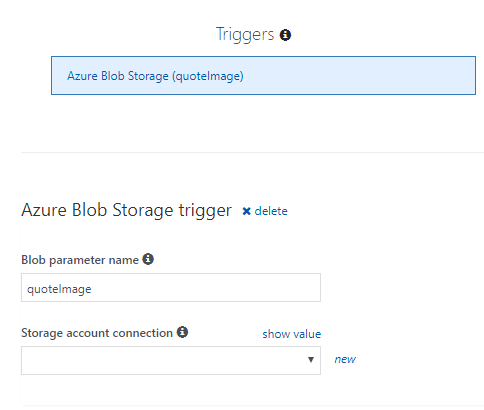

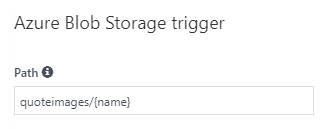

The idea is to trigger the function whenever a new image is added or uploaded to the blob storage. To filter down the list of templates, select C# as the Language and then select BlobTrigger - C# from the template list. I have also changed the name of the function to QuoteExtractor and set the Path parameter of the Azure Blob Storage trigger to quoteimages. quoteimages is the name of the container the function binds to; whenever a new image or item is added to that container, the function is triggered.

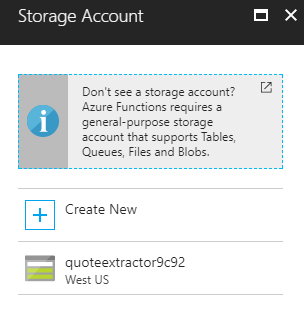

Now change the Storage account connection, which is basically a connection string for your storage account. To create a new connection, click the new link and select the storage account you want your function to be associated with. The storage account I am selecting is the same one that was created at the time of creating the Function App.

Once you select the storage account, you will be able to see the connection key in the Storage account connection dropdown. If you want to view the full connection string then click the show value link.

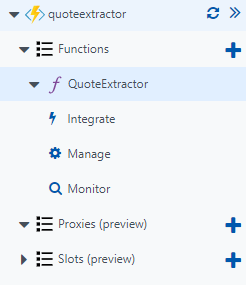

Click the Create button to create the function. You will now be able to see your function name under the Functions section. Expand your function and you will see three other segments named Integrate, Manage and Monitor.

Click on Integrate and, under Triggers, update the Blob parameter name from myBlob to quoteImage or any other name you prefer. This name is important, as it is the same parameter name I will be using in my function. Click Save to save the settings.

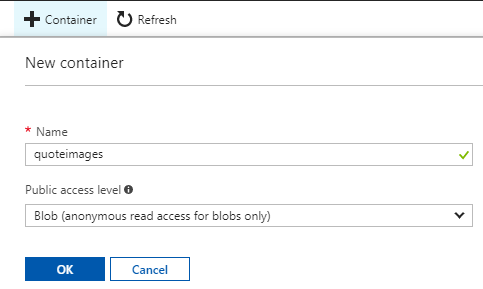

The storage account which was created along with the Function App still does not have a container. Add a container with the name used in the path of the Azure Blob Storage trigger, which is quoteimages.

Make sure you set the Public access level to Blob. Click OK to create the container in your storage account.

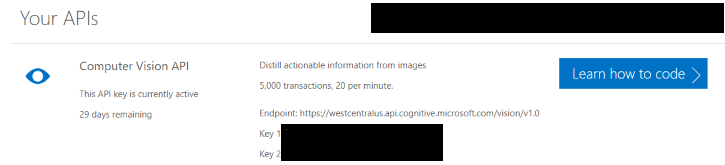

With this step, you should be done with all the configuration required for the Azure Function to work properly. Now let’s get the Computer Vision API subscription. Go to https://azure.microsoft.com/en-in/try/cognitive-services/ and, under the Vision section, click Get API Key next to Computer Vision API. Agree to the terms and conditions and continue. Log in with your preferred account to get the subscription ready. If everything goes well, you will see your subscription key and endpoint details.

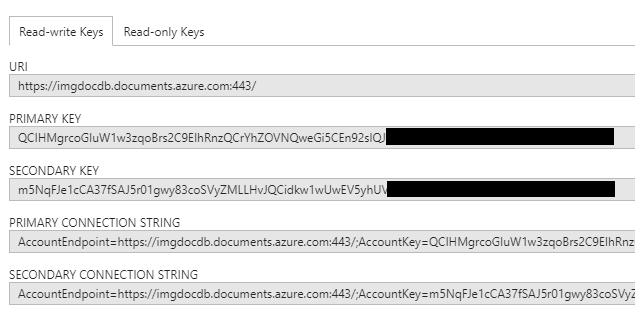

The result which I am going to get as a response from the API is in JSON format. I can either parse the JSON response in the function itself or save it directly in CosmosDB or any other persistent storage. I am going to put the whole response in CosmosDB in raw format. This is an optional step for you, as the idea is to see how easy it is to use the Cognitive Services Computer Vision API with Azure Functions. If you are skipping this step, then you have to tweak the code a bit so that you can see the response in the log window of your function.
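For readers who would rather parse the response than store it raw, the Python sketch below shows one way to pull the recognized text out of it. It assumes the OCR response nests text as regions, then lines, then words, each word carrying a text field; the function name `extract_lines` is hypothetical, and the sample is hand-written in that assumed shape rather than a real API response.

```python
import json

def extract_lines(api_response):
    """Collect the text of each recognized line from an OCR response string."""
    data = json.loads(api_response)
    lines = []
    for region in data.get("regions", []):
        for line in region.get("lines", []):
            words = [w["text"] for w in line.get("words", [])]
            lines.append(" ".join(words))
    return lines

# Hand-written sample in the assumed shape, not a real API response.
sample = json.dumps({
    "language": "en",
    "regions": [{"lines": [
        {"words": [{"text": "Stay"}, {"text": "hungry"}]},
        {"words": [{"text": "Stay"}, {"text": "foolish"}]},
    ]}],
})
print(extract_lines(sample))
```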

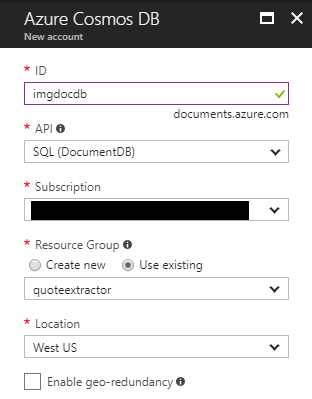

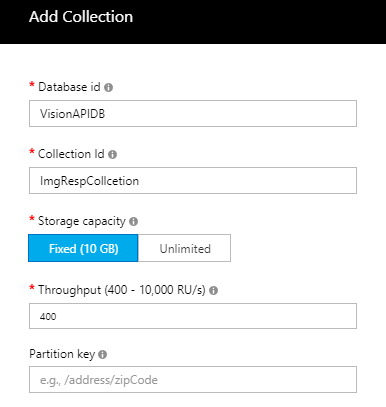

Provision a new CosmosDB account and add a collection to it. By default there is a database named ToDoList with a collection called Items, which you can create from the getting started page right after provisioning CosmosDB. If you want, you can also create a new database and add a new collection with names that make more sense.

To create a new database and collection go to Data Explorer and click on New Collection. Enter the details and click OK to create a database and a collection.

Now we have everything ready. Let’s get started with the code!

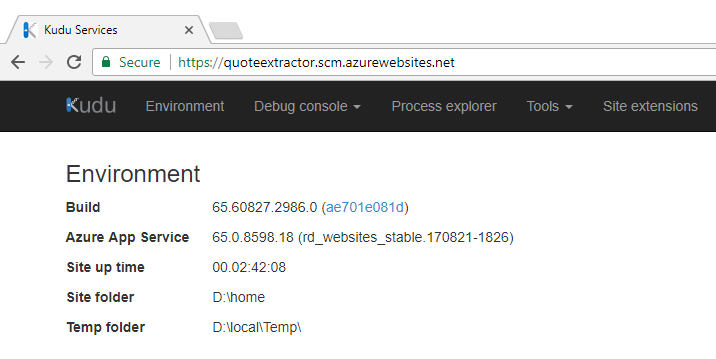

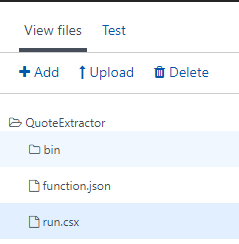

Let’s start by creating a new function called SaveResponse, which takes the API response as a parameter of type string. In this method I am going to use the Azure DocumentDB library to communicate with CosmosDB, which means I have to add this library as a reference to my function. To do that, navigate to the KUDU portal by adding scm to the URL of your function app, like so: https://quoteextractor.scm.azurewebsites.net/. This will open up the KUDU portal.

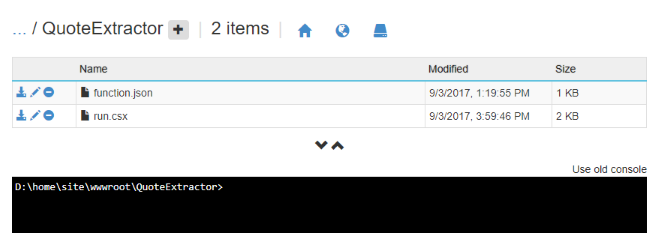

Go to Debug console and click CMD. Navigate to site/wwwroot/<Function Name>. In the command window, use the mkdir command to create a folder named bin.

mkdir bin

You can now see the bin folder in the function app directory. Click the bin folder and upload the lib(s).

In the very first line of the function use #r directive to add the reference of external libraries or assemblies. Now you can use this library in the function with the help of using directive.

#r "D:\home\site\wwwroot\QuoteExtractor\bin\Microsoft.Azure.Documents.Client.dll"

Right after adding the reference of the DocumentDB assembly, add namespaces with the help of using directive. In the later stage of the function, you will also need to make HTTP POST calls to the API endpoint and therefore make use of the System.Net.Http namespace as well.

using Microsoft.Azure.Documents;

using Microsoft.Azure.Documents.Client;

using System.Net.Http;

using System.Net.Http.Headers;

The code for the SaveResponse function is very simple: it just uses the DocumentClient class to create a new document for the response we receive from the Vision API. Here is the complete code.

private static async Task<bool> SaveResponse(string APIResponse)

{

bool isSaved = false;

const string EndpointUrl = "https://imgdocdb.documents.azure.com:443/";

const string PrimaryKey = "QCIHMgrcoGIuW1w3zqoBrs2C9EIhRnxQCrYhZOVNQweGi5CEn94sIQJOHK3NleFYDoFclB7DwhYATRJwEiUPag==";

try

{

DocumentClient client = new DocumentClient(new Uri(EndpointUrl), PrimaryKey);

await client.CreateDocumentAsync(UriFactory.CreateDocumentCollectionUri("VisionAPIDB", "ImgRespCollcetion"), new {APIResponse});

isSaved = true;

}

catch(Exception)

{

//Do something useful here.

}

return isSaved;

}

Notice the database and collection names in the call to CreateDocumentAsync, where CreateDocumentCollectionUri returns the endpoint URI based on the database and collection name. The last parameter, new {APIResponse}, is the response received from the Vision API.

Create a new function and call it ExtractText. This function takes the Stream object of the image, which we can easily get from the Run function. It converts the stream into a byte array with the help of another method, ConvertStreamToByteArray, and then sends the byte array to the Vision API endpoint to get the response. Once the API responds successfully, I will save the response in CosmosDB. Here is the complete code for the function ExtractText.

private static async Task<string> ExtractText(Stream quoteImage, TraceWriter log)

{

string APIResponse = string.Empty;

string APIKEY = "b33f562505bd7cc4b37b5e44cb2d2a2b";

string Endpoint = "https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/ocr";

HttpClient client = new HttpClient();

client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", APIKEY);

Endpoint = Endpoint + "?language=unk&detectOrientation=true";

byte[] imgArray = ConvertStreamToByteArray(quoteImage);

HttpResponseMessage response;

try

{

using(ByteArrayContent content = new ByteArrayContent(imgArray))

{

content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

response = await client.PostAsync(Endpoint, content);

APIResponse = await response.Content.ReadAsStringAsync();

log.Info(APIResponse);

//TODO: Perform check on the response and act accordingly.

}

}

catch(Exception)

{

log.Error("Error occurred");

}

return APIResponse;

}
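The query string that the code above appends to Endpoint can also be assembled from named parameters instead of a hand-written string. The Python sketch below illustrates that idea only; `build_ocr_url` is a hypothetical helper, and it reproduces the same two parameters used in the C# code.

```python
from urllib.parse import urlencode

def build_ocr_url(endpoint, language="unk", detect_orientation=True):
    """Append the OCR query parameters to the endpoint URL."""
    params = {
        "language": language,  # "unk" asks the service to auto-detect the language
        "detectOrientation": str(detect_orientation).lower(),
    }
    return endpoint + "?" + urlencode(params)

url = build_ocr_url("https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/ocr")
print(url)
```

Building the query with urlencode also takes care of escaping if a parameter value ever contains characters that are not URL-safe.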

There are a few things in the above function which need our attention. You get the subscription key and the endpoint when you register for the Cognitive Services Computer Vision API. Notice that the endpoint I am using has ocr at the end, which is important because I want to read the text from the images I am uploading to the storage. The other parameters I am passing are language and detectOrientation. language has the value unk, which stands for unknown and tells the API to auto-detect the language, while detectOrientation makes the API check the text orientation in the image. The API responds in JSON format, which this method returns to the Run function, and this output becomes the input for the SaveResponse method. Here is the code for the ConvertStreamToByteArray method.

private static byte[] ConvertStreamToByteArray(Stream input)

{

byte[] buffer = new byte[16*1024];

using (MemoryStream ms = new MemoryStream())

{

int read;

while ((read = input.Read(buffer, 0, buffer.Length)) > 0)

{

ms.Write(buffer, 0, read);

}

return ms.ToArray();

}

}
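The same read-in-chunks pattern, for readers more at home in Python, looks roughly like this. It is a sketch for comparison rather than part of the function app; `stream_to_bytes` is a hypothetical name, and the 16 KB chunk size simply mirrors the buffer size used above.

```python
import io

def stream_to_bytes(stream, chunk_size=16 * 1024):
    """Read a binary stream to the end in fixed-size chunks and return all bytes."""
    buffer = io.BytesIO()
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:  # empty result means end of stream
            break
        buffer.write(chunk)
    return buffer.getvalue()

# Made-up payload larger than one chunk, to exercise the loop more than once.
payload = bytes(range(256)) * 200
print(len(stream_to_bytes(io.BytesIO(payload))))
```

Reading in chunks works even when the stream does not report its length up front, which is why the loop runs until a read returns nothing.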

Now comes the method that ties everything together, the Run method. Here is what the Run method looks like.

public static async Task<bool> Run(Stream quoteImage, string name, TraceWriter log)

{

string response = await ExtractText(quoteImage, log);

bool isSaved = await SaveResponse(response);

return isSaved;

}

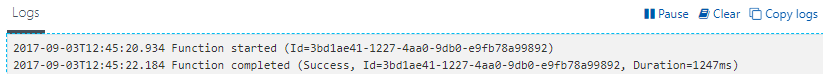

I don’t think this method needs any explanation. Let’s execute the function by adding a new image to the storage and see whether a log entry appears in the Logs window and a new document appears in CosmosDB. Here is the screenshot of the Logs window after the function was triggered when I uploaded a new image to the storage.

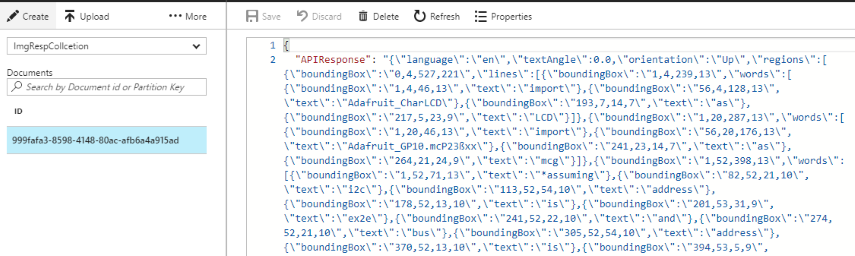

In CosmosDB, I can see a new document added to the collection. To view the complete document, navigate to Document Explorer and check the results. This is what my view looks like:

To save you all a little time, here is the complete code.

#r "D:\home\site\wwwroot\QuoteExtractor\bin\Microsoft.Azure.Documents.Client.dll"

using System.Net.Http.Headers;

using System.Net.Http;

using Microsoft.Azure.Documents;

using Microsoft.Azure.Documents.Client;

public static async Task<bool> Run(Stream quoteImage, string name, TraceWriter log)

{

string response = await ExtractText(quoteImage, log);

bool isSaved = await SaveResponse(response);

return isSaved;

}

private static async Task<string> ExtractText(Stream quoteImage, TraceWriter log)

{

string APIResponse = string.Empty;

string APIKEY = "b33f562505bd7cc4b37b5e44cb2d2a2b";

string Endpoint = "https://westcentralus.api.cognitive.microsoft.com/vision/v1.0/ocr";

HttpClient client = new HttpClient();

client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", APIKEY);

Endpoint = Endpoint + "?language=unk&detectOrientation=true";

byte[] imgArray = ConvertStreamToByteArray(quoteImage);

HttpResponseMessage response;

try

{

using(ByteArrayContent content = new ByteArrayContent(imgArray))

{

content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

response = await client.PostAsync(Endpoint, content);

APIResponse = await response.Content.ReadAsStringAsync();

//TODO: Perform check on the response and act accordingly.

}

}

catch(Exception)

{

log.Error("Error occurred");

}

return APIResponse;

}

private static async Task<bool> SaveResponse(string APIResponse)

{

bool isSaved = false;

const string EndpointUrl = "https://imgdocdb.documents.azure.com:443/";

const string PrimaryKey = "QCIHMgrcoGIuW1w3zqoBrs2C9EIhRnzZCrYhZOVNQweIi5CEn94sIQJOHK2NkeFYDoFcpB7DwhYATRJwEiUPbg==";

try

{

DocumentClient client = new DocumentClient(new Uri(EndpointUrl), PrimaryKey);

await client.CreateDocumentAsync(UriFactory.CreateDocumentCollectionUri("VisionAPIDB", "ImgRespCollcetion"), new {APIResponse});

isSaved = true;

}

catch(Exception)

{

//Do something useful here.

}

return isSaved;

}

private static byte[] ConvertStreamToByteArray(Stream input)

{

byte[] buffer = new byte[16*1024];

using (MemoryStream ms = new MemoryStream())

{

int read;

while ((read = input.Read(buffer, 0, buffer.Length)) > 0)

{

ms.Write(buffer, 0, read);

}

return ms.ToArray();

}

}